Having covered the vision and key architectural aspects of ArduPilot in our previous essays, we focus in this post on software quality. First we look at the continuous integration and test processes. Then we examine recent coding activity and its alignment with the roadmap. Finally, we assess code quality and maintainability, as well as technical debt.

Continuous Integration: ArduPilot’s Journey

Continuous Integration (CI) is a development practice in which developers integrate their updated code into a shared repository frequently, often several times a day. Each integration, typically a pull request, is verified by an automated build, allowing teams to detect problems early and locate them more easily.

ArduPilot’s developers follow a set of practices that support continuous integration: maintaining a single source repository on GitHub; automating the build, test, and deployment process; testing in a branch other than master; and ensuring that work is based on the latest version of the code. A large part of these steps is automated using Travis CI, Semaphore CI, ArduPilot’s AutoTest, and Azure DevOps, which are launched automatically on each pull request.

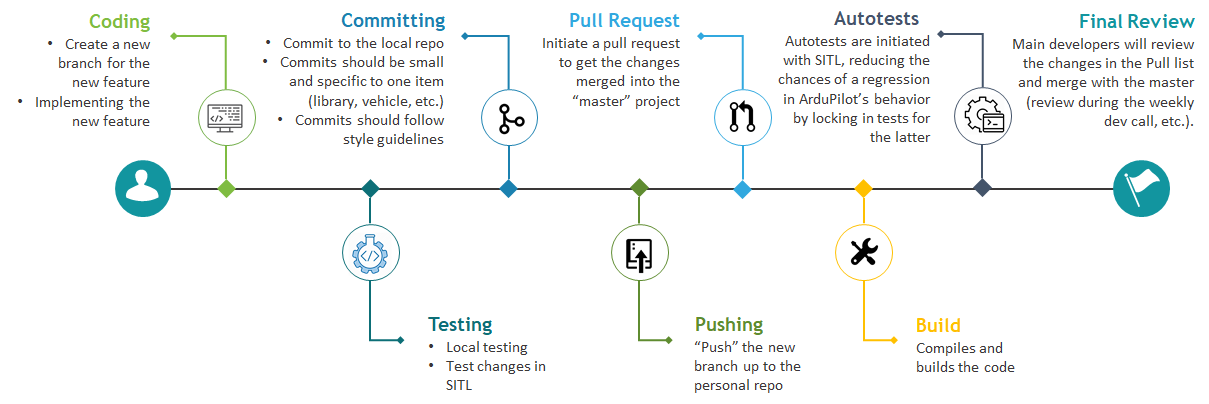

These procedures are maintained by ensuring that developers build and test ArduPilot before submitting patches to master.

Pre-flight Checks: ArduPilot’s Test Process

As mentioned in our previous essay, the test process for ArduPilot is, in summary [1]:

- Alpha Testing: Run by AutoTest.
  - After most commits to the project’s master branch, AutoTest runs standard tests in a simulation.
- Beta Testing: Beta testers conduct tests in simulations or on hardware.
  - Beta versions are defined, released, and announced by developers.
  - Beta testers report bugs.
  - After weeks of testing, developers discuss the go/no-go decision for a stable release on development calls.
- Stable Releases: Prepared versions are released and announced.
  - Forums are used for further bug reports and related testing discussion.

Simulating for Success

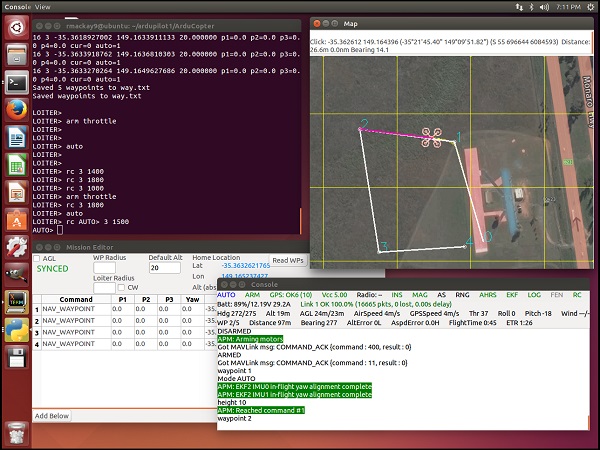

ArduPilot’s “Software in the Loop (SITL)” Simulator is an integral part of its testing process. It is a build of the autopilot/flight code that uses an ordinary C++ compiler and offers several debugging tools. When ArduPilot is running in SITL, the sensor data comes from a model of the craft in a flight simulator, while the autopilot treats it the same as real-world data [2].

SITL can simulate many vehicles and devices, including multi-rotor and fixed-wing aircraft, ground vehicles, underwater vehicles, support devices like antenna trackers, and optional sensors. In addition, new simulated vehicle types or sensors can be added by following the documented steps [2].

ArduPilot’s versatile design makes it indifferent to the platform used. This includes virtual platforms in SITL, so ArduPilot behaves in a simulated environment exactly as it would in the real world [2]. This eliminates most issues with reproducing bugs or with the software behaving differently in different environments. Hence successful simulation tests, given sufficient coverage of the code and use cases, can be considered credible and reliable.
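The idea that flight code cannot tell simulated sensor data from real data can be illustrated with a small sketch. ArduPilot itself is written in C++; the Python below is only an illustration, and every name in it (`AltitudeSource`, `SimulatedBarometer`, `altitude_hold_reached`) is hypothetical rather than part of ArduPilot or SITL.

```python
from abc import ABC, abstractmethod


class AltitudeSource(ABC):
    """Hypothetical sensor interface; not an ArduPilot class."""

    @abstractmethod
    def read_altitude_m(self) -> float:
        ...


class SimulatedBarometer(AltitudeSource):
    """Backend fed by a toy vehicle model instead of hardware."""

    def __init__(self, climb_rate_m_s: float, dt_s: float) -> None:
        self.altitude_m = 0.0
        self.climb_rate_m_s = climb_rate_m_s
        self.dt_s = dt_s

    def read_altitude_m(self) -> float:
        # Advance the simulated vehicle by one time step on every read.
        self.altitude_m += self.climb_rate_m_s * self.dt_s
        return self.altitude_m


def altitude_hold_reached(source: AltitudeSource, target_m: float, max_steps: int) -> bool:
    """Stand-in for flight logic: it only sees the interface, never the backend."""
    for _ in range(max_steps):
        if source.read_altitude_m() >= target_m:
            return True
    return False


sim = SimulatedBarometer(climb_rate_m_s=2.0, dt_s=0.5)
reached = altitude_hold_reached(sim, target_m=10.0, max_steps=20)
print(reached)
```

A hardware-backed class implementing the same interface could be passed to `altitude_hold_reached` without changing the function, which is the property that makes simulation results transferable.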

AutoTest-ing

The AutoTest suite is an ArduPilot tool for quickly creating and running repeatable tests in SITL. It saves developers the time they would otherwise spend manually preparing and running complex tests. It also allows running several tests with small, controlled differences in order to isolate the conditions causing a bug. Those conditions can then be turned into a repeatable test and sent to the developers working on that specific bug, which supports a test-driven development workflow [3].

Apart from being used locally, the AutoTest suite runs automatically on ArduPilot’s AutoTest server, which tests most commits made to the master branch against 28 standard tests designed to catch the most frequent bugs. Debugging aside, developers are also encouraged to prepare or use repeatable tests to clearly demonstrate how their patches change flight behavior [3].
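Running the same test with small, controlled differences to isolate a failing condition can be sketched as follows. This is not the AutoTest API: the toy model `simulate_takeoff`, the drag factor, and the wind values are all assumptions made up for illustration.

```python
def simulate_takeoff(target_alt_m: float, wind_m_s: float, steps: int = 50) -> float:
    """Toy deterministic model: climb 1 m per step, minus a wind penalty."""
    altitude = 0.0
    for _ in range(steps):
        altitude += 1.0 - 0.08 * wind_m_s
        if altitude >= target_alt_m:
            break
    return altitude


def run_case(wind_m_s: float) -> bool:
    """One repeatable test case: is 20 m reached within the step budget?"""
    return simulate_takeoff(target_alt_m=20.0, wind_m_s=wind_m_s) >= 20.0


# Vary a single parameter while keeping everything else fixed.
winds = [0.0, 5.0, 10.0, 15.0]
results = {wind: run_case(wind) for wind in winds}
failing = [wind for wind, ok in results.items() if not ok]
print(failing)
```

Because the model is deterministic, a failing wind value found this way can be handed to another developer as a reproducible test case.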

Developer Testing

During Beta testing, and in response to bug reports after releases, developers in the community use both simulators and real vehicles for testing. Effective communication between developers is made possible by public forums (such as the ArduPilot forums and the GitHub issues page), where issues and the work done on them can be logged and tracked easily. Furthermore, the developer chat page and the weekly development call provide an environment for real-time discussion of bugs by senior developers [4]. Hence selected bugs can be identified, addressed, and solved efficiently by the community.

Code Coverage

To analyze code coverage, ArduPilot developers use the open source tool LCOV, an extension of GCC’s GCOV [5]. The tool reports how many times each line of code in the repository was executed in a given test, so it can be used to verify whether the intended executions happened. ArduPilot developers use it for some tests (but not all) and keep the generated reports online. This way, they can check whether the successful tests cover enough of the code.
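To make the per-line execution counts concrete, here is a minimal sketch that reads LCOV tracefile records (`SF:` for the source file, `DA:<line>,<count>` for each instrumented line, `end_of_record` to close a file) and derives line coverage. The sample tracefile and its paths are made up for illustration and are not ArduPilot data.

```python
from typing import Dict, Tuple

# Made-up tracefile content in LCOV's record format.
SAMPLE_TRACEFILE = """\
TN:
SF:/src/example/mode.cpp
DA:10,4
DA:11,4
DA:12,0
DA:15,1
end_of_record
SF:/src/example/system.cpp
DA:3,0
DA:4,0
end_of_record
"""


def line_coverage(tracefile_text: str) -> Dict[str, Tuple[int, int]]:
    """Return {source_file: (lines_hit, lines_instrumented)} from DA records."""
    coverage: Dict[str, Tuple[int, int]] = {}
    current = None
    hit = total = 0
    for raw in tracefile_text.splitlines():
        line = raw.strip()
        if line.startswith("SF:"):
            current, hit, total = line[3:], 0, 0
        elif line.startswith("DA:") and current is not None:
            # DA:<line number>,<execution count>[,<checksum>]
            fields = line[3:].split(",")
            total += 1
            if int(fields[1]) > 0:
                hit += 1
        elif line == "end_of_record" and current is not None:
            coverage[current] = (hit, total)
            current = None
    return coverage


def overall_line_coverage(per_file: Dict[str, Tuple[int, int]]) -> float:
    """Fraction of instrumented lines executed at least once."""
    hit = sum(h for h, _ in per_file.values())
    total = sum(t for _, t in per_file.values())
    return hit / total if total else 0.0


per_file = line_coverage(SAMPLE_TRACEFILE)
print(per_file, overall_line_coverage(per_file))
```

A file such as the second one in the sample, with every count at zero, is exactly the kind of gap that a coverage report makes visible.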

Architectural Activity: Diving Into ArduPilot’s Code History

Over the past years, people have worked on different components within ArduPilot. In this section we look at their recent merge history.

Recent Focus

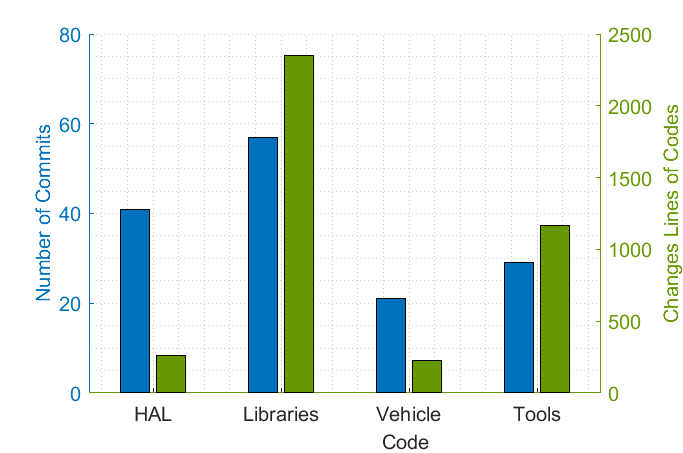

We mentioned in our last essay that the code structure has 3 types of modules: vehicle modules, shared libraries, and hardware abstraction layer (HAL) modules. Classifying each individual commit by module gives the figure shown below. By far the most commits are made to the libraries, and the fewest to the vehicle code. At the end of last year, ArduPilot introduced experimental support for Lua scripting [6] [7]. Only recently did they include the math and string libraries for Lua, which explains the high number of code changes in the libraries. The figure also includes Tools. Lately there have been many contributions to AutoTest, which explains the high number of code changes there. Interestingly, the HAL has more commits than Tools but fewer code changes, which indicates that the HAL module is worked on actively, but with only small changes each time.

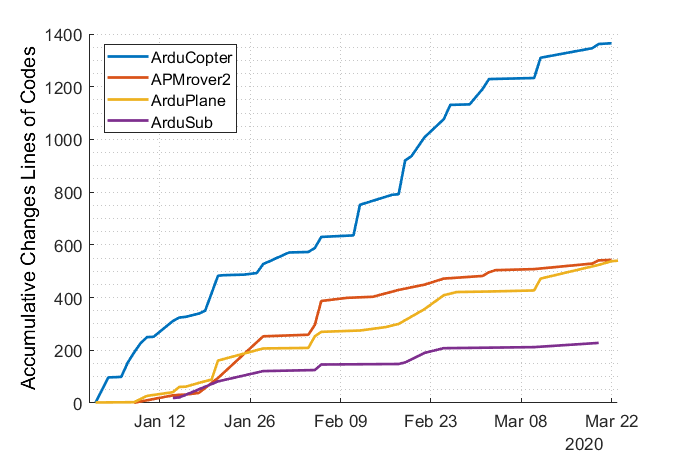

A further look into the different vehicle codes shows that ArduCopter is the most active in terms of code changes, and ArduSub the least active.
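The per-module tally discussed in this section could be computed along the following lines. This is a sketch under assumptions: the log excerpt is made up, in the shape produced by `git log --numstat --format="commit %h"`, and the mapping from top-level directories to modules is our own simplification of the repository layout.

```python
from collections import defaultdict

# Made-up excerpt; hashes, paths, and numbers are illustrative only.
SAMPLE_LOG = """\
commit a1
12\t3\tlibraries/AP_Scripting/example.cpp
commit b2
2\t1\tlibraries/AP_HAL_ChibiOS/example.cpp
commit c3
40\t5\tTools/autotest/example.py
1\t1\tArduCopter/mode.cpp
commit d4
3\t0\tlibraries/AP_HAL/example.h
"""

# Assumed vehicle directories, based on the vehicles named in this essay.
VEHICLE_DIRS = {"ArduCopter", "ArduPlane", "ArduSub", "APMrover2", "AntennaTracker"}


def classify(path: str) -> str:
    """Map a file path to one of the module types used in this essay."""
    parts = path.split("/")
    if parts[0] in VEHICLE_DIRS:
        return "vehicle"
    if parts[0] == "Tools":
        return "tools"
    if parts[0] == "libraries":
        if len(parts) > 1 and parts[1].startswith("AP_HAL"):
            return "hal"
        return "libraries"
    return "other"


def summarize(log_text: str) -> dict:
    """Return {module: (number_of_commits, lines_added_plus_deleted)}."""
    commits = defaultdict(set)
    changed = defaultdict(int)
    current = None
    for line in log_text.splitlines():
        if line.startswith("commit "):
            current = line.split()[1]
            continue
        fields = line.split("\t")
        if current is None or len(fields) != 3:
            continue
        added, deleted, path = fields
        if added == "-":  # numstat prints "-" for binary files
            continue
        module = classify(path)
        commits[module].add(current)
        changed[module] += int(added) + int(deleted)
    return {module: (len(commits[module]), changed[module]) for module in commits}


summary = summarize(SAMPLE_LOG)
print(summary)
```

In this made-up sample the HAL has more commits than Tools but fewer changed lines, which mirrors the pattern noted above.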

Implementing the Roadmap

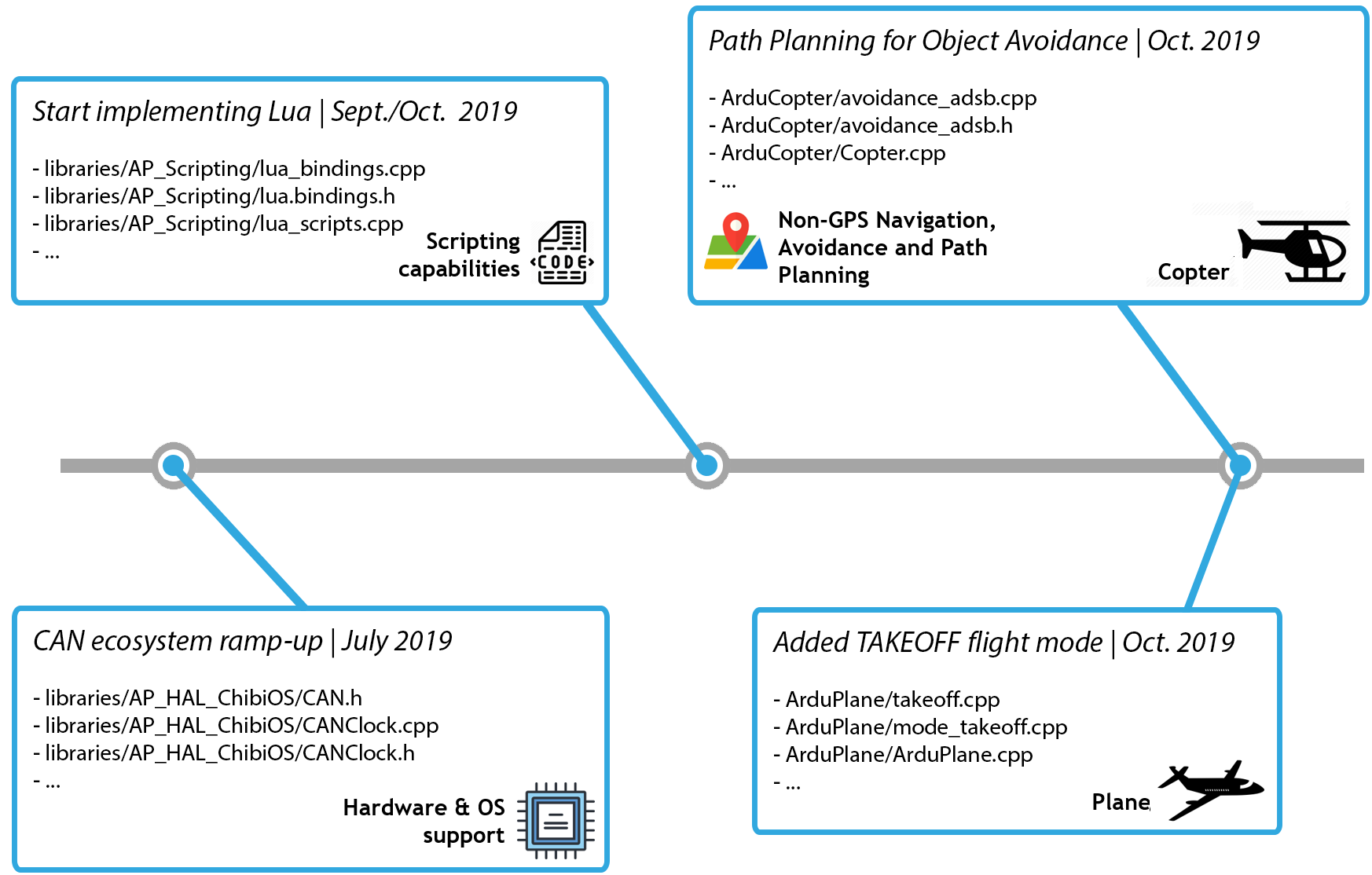

ArduPilot has a roadmap of features to add for 2019 and beyond. An overview of some of the features implemented in the release versions is shown in the accompanying figure.

The figure shows 4 features that have been implemented. Since each feature consists of quite a lot of code and files rather than a single function, only some of the related files are shown. As can be seen, the CAN ecosystem ramp-up mainly focuses on the HAL module, the implementation of Lua is part of the shared libraries, and both features for the copter and plane are part of the vehicle code module.

Does the Code Look Any Good?

Code quality is arguably one of the most important aspects of a successful project [8]. It goes hand in hand with qualities like reliability, maintainability, and efficiency. With approximately 40,000 lines of code, ArduPilot can be considered a relatively large project, and for large projects maintainability is especially important: every modification, new feature, or bug fix takes extra effort when the code is hard to maintain.

After analyzing the code in the ArduPilot project, we concluded that the developers struggle with two things concerning maintainability: unit size and complexity. In other words, methods are overly large and overly complex, indicating that they might not all have just one responsibility. This makes it difficult for the developers to pinpoint exactly where bugs happen or where to start creating new features. Since the main components are where most of the changes in code happen, this can cause a lot of issues.

In our first pull request for this course, we addressed the unit size issue for one of the APMRover2 files. A method that was over 300 lines long has now been separated into smaller methods, each with its own responsibility. Arguably some of the newly introduced methods could have been smaller. However, we chose not to split them further because that would spread a single responsibility over multiple methods. Contributors quickly responded to the pull request, one of them saying:

khancyr: I like the idea as it will be simplier to deal with those functions (and use a static analyzer).

From this response we can conclude that (some of) the developers might not have had maintainability in mind at all times while writing the code, but that their eyes have been opened.

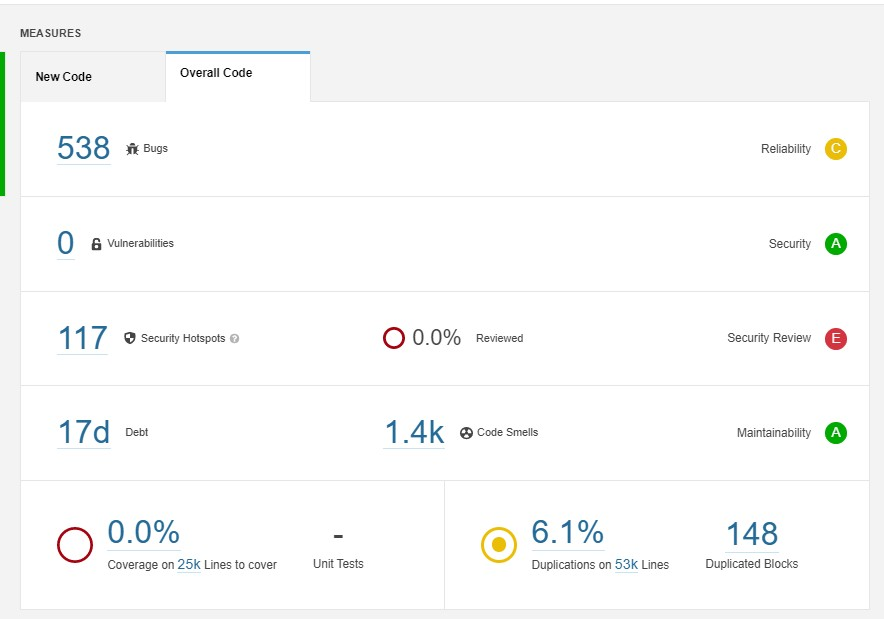

Technical Debt: What the Numbers Say

Technical debt is a measure of the consequences of deliberately doing “something wrong” in order to deliver a product to market quickly, favoring short-term benefit over a sustainable design strategy. In this section, we analyze the technical debt within ArduPilot in terms of the identified parameters, tools, and methods used. We used the community version of SonarQube [9], which assesses HTML, CSS, and Python files. However, much of ArduPilot’s code is in C++, whose analysis is supported only in the paid version of SonarQube. The assessment is given below.

We also used the static analysis from SIG [10] to analyze ArduPilot’s debt. For each of the metrics identified below, we followed the “SIG/TUViT method” and identified thresholds allowing us to qualitatively address them [11] [12] [13].

Code Duplication

This metric measures the degree of duplication in ArduPilot’s code. SIG gave a score of 3.1/5.0 on this metric. Analyzing the results per file, we notice that the average percentage of duplication is 12% for the different vehicle codes. Putting this into perspective, we find it appropriate to lower the weight of this metric in the debt calculation, as it depends on the specific architecture of ArduPilot. That architecture caters for a large variety of board models, which may be similar enough to require somewhat similar code and common modes.
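To illustrate what a duplication percentage measures, the sketch below marks every line that belongs to a block of consecutive lines occurring more than once. It is a simplified stand-in, not SIG’s implementation: the normalization (stripping whitespace, dropping blank lines) and the window size are assumptions, and the example uses a window of 3 only to keep the sample short.

```python
from collections import defaultdict
from typing import List


def duplicated_line_ratio(lines: List[str], window: int = 6) -> float:
    """Fraction of non-blank lines that sit inside a repeated block of `window` lines."""
    normalized = [ln.strip() for ln in lines if ln.strip()]
    starts_by_block = defaultdict(list)
    for i in range(len(normalized) - window + 1):
        starts_by_block[tuple(normalized[i:i + window])].append(i)

    duplicated = set()
    for starts in starts_by_block.values():
        if len(starts) > 1:  # the same block appears at several positions
            for start in starts:
                duplicated.update(range(start, start + window))
    return len(duplicated) / len(normalized) if normalized else 0.0


sample = [
    "a = read()",
    "b = scale(a)",
    "send(b)",
    "log('done')",
    "a = read()",
    "b = scale(a)",
    "send(b)",
    "return",
]
ratio = duplicated_line_ratio(sample, window=3)
print(ratio)
```

Vehicle codes that share modes and setup logic would naturally score higher on such a measure, which is the reason given above for weighting it less.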

Unit Size and Complexity

These metrics relate to the distribution of the number of lines of code over the units of the source code, and to the complexity of the system. SIG gives unit size a score of 1.7/5.0. Such a low score indicates large units, which make the code harder to understand and make it more difficult for developers to identify issues or the areas of the code they need to modify, leading to a moderate to high debt. As for complexity, analyzing the McCabe index from the Files view of SIG, we notice that nearly 25% of the objects are very complex, making them harder to test and maintain.
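As a rough illustration of both metrics, the sketch below computes the length of a function and an approximation of its McCabe index (one plus the number of decision points). It analyzes Python source with the standard `ast` module purely for convenience; ArduPilot’s C++ would need a different parser, and the set of node types counted as decisions is a simplifying assumption. The sample function is made up.

```python
import ast

# Node types treated as one decision point each (a simplification).
DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)

SAMPLE = '''
def update_mode(armed, altitude, battery_ok):
    if not armed:
        return "idle"
    if altitude < 2 and battery_ok:
        return "takeoff"
    for _ in range(3):
        if not battery_ok:
            return "land"
    return "hold"
'''


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe index: 1 + number of decision points in the source."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra branches.
            complexity += len(node.values) - 1
    return complexity


def unit_sizes(source: str) -> dict:
    """Return {function_name: number_of_source_lines} (needs Python 3.8+)."""
    return {
        node.name: node.end_lineno - node.lineno + 1
        for node in ast.walk(ast.parse(source))
        if isinstance(node, ast.FunctionDef)
    }


complexity = cyclomatic_complexity(SAMPLE)
sizes = unit_sizes(SAMPLE)
print(complexity, sizes)
```

Splitting a long method into smaller ones, as in the pull request described earlier, lowers both numbers for each resulting unit.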

Module Coupling and Dependency Analysis

This metric quantifies the chance that a code change in one place propagates to other places in the system. High coupling negatively impacts the modifiability of the source code, along with its modularity and testability. Major issues appear to be in ArduCopter’s mode.cpp, the libraries files, and the system.cpp files of ArduSub and AntennaTracker.

Testing Coverage

In the latest LCOV report, about 36% of the functions and 45% of the lines were not covered by the tests [14]. This suggests incomplete test coverage, although key files like Copter.cpp were properly covered. Incomplete coverage puts the quality of the project at risk, because changes to uncovered code can break behavior and cause regressions without the source of the error being properly identified.

Therefore…

Based on the insights we got from SIG and on our own analysis, we notice that most of the issues originate from the libraries, which are called and used by the different vehicles and interfaces and probably account for most of the technical debt in the system. The major debt seems to originate specifically from large unit sizes and complex modules.

1. https://ardupilot.org/dev/docs/release-procedures.html?highlight=release
2. https://ardupilot.org/dev/docs/sitl-simulator-software-in-the-loop.html
3. https://ardupilot.org/dev/docs/the-ardupilot-autotest-framework.html
4. https://ardupilot.org/ardupilot/docs/common-contact-us.html
5. https://github.com/ArduPilot/ardupilot/blob/194998d6314082f7d51a64d7fc967316ad766a05/Tools/scripts/run-coverage.sh
6. https://github.com/ArduPilot/ardupilot/blob/master/ArduPlane/release-notes.txt
7. https://ardupilot.org/copter/docs/common-lua-scripts.html
8. https://medium.com/emblatech/the-importance-of-code-quality-ac7afa598c0d
9. https://www.sonarqube.org/
10. https://sigrid-says.com/maintainability/tudelft/ardupilot/components
11. Sigrid manual - https://sigrid-says.com/softwaremonitor/tudelft-ardupilot/docs/Sigrid_User_Manual_20191224.pdf
12. Test Code Quality and Its Relation to Issue Handling Performance - http://resolver.tudelft.nl/uuid:3b6e5a90-d338-4c78-8c84-9c78598568bf
13. SIG/TÜViT Evaluation Criteria Trusted Product Maintainability - SIG
14. https://firmware.ardupilot.org/coverage/index.html