In the previous blog, we zoomed into spaCy through the lens of the architectural views as described by Kruchten1. After discussing the product vision and the architecture, it is time to take a look at the quality safeguards and the architectural integrity of the underlying system.

Which process is used to ensure quality?

spaCy depends on the community to find bugs or missing functions. Once someone finds a bug or a possible enhancement, they can raise an issue on github in order to notify the maintainers of the bug and try to fix it themselves. spaCy asks their contributors to follow certain steps when contributing 2.

When noticing a bug, first check if it has already been reported. If it has, they “better” leave a comment and continue on that issue, instead of creating a new one. The next thing is to create a test issue, test for the bug and making sure the test fails. The latter seems rather counterintuitive at first. When thinking about it though, the only way to report a bug is to show the bug is there. When the test would pass, the bug would not be present. Once the test fails, add and commit the test file to the issue. Once the bug is fixed, and the test passes, reference the issue in the commit message. 3

A bot automatically checks if the contributor has signed the spaCy Contributer Agreement(SCA) which makes sure spaCy may use the contribution across the whole project.

Continuous Integration (CI) Pipeplines: Checks and Config

spaCy uses Azure pipelines to maintain the checks and configurations to be tested during CI. There are two kinds of checks done4:

Validate Check: These checks use flake8 on Ubuntu 16.04 and python 3.7 to check code style. They check for errors:

- F821 undefined variable,

- F822 undefined variable in

__all__ - F823 local variable name referenced before assignment

Module and Functionality Tests:

spaCy is tested to run on the following operating systems:

- Windows: Windows Server 2016 with Visual Studio 2017 - python3.5, python3.6, python3.8

- Linux: ubuntu-16.04 - python3.5, python3.6, python3.8

- MacOS: macos-10.13 - python3.6, python 3.8

All of the above test environments have a 64 bit architecture. They stopped testing on python3.7 temporarily to speed up builds, to this date, testing has not been resumed.5

How are checks carried out?

SpaCy uses the pytest testing framework6. All tests are collected in a single folder test whose structure is similar to the source, except for the fact that the file names are named in the pattern test_[module]_[tested_behaviour]. After looking through many of the tests, we found that this is not strictly followed and a majority of the tests are named test_[module] and each behaviour is tested as part of a separate test inside the file.

SpaCy has detailed guidelines around writing tests6, some of the important ones are:

- Tests that are extensive and take too long to complete are marked as slow tests using the

@pytest.mark.slowdecorator. Pytest allows to skip all slow tests. - The organization of test folders means that we can run all tests for a specific module/directory, if we need it.

- Regression tests are required to be added when a bug corresponding to an issue is fixed. This ensures that future changes will not result in the same bug re-surfacing.

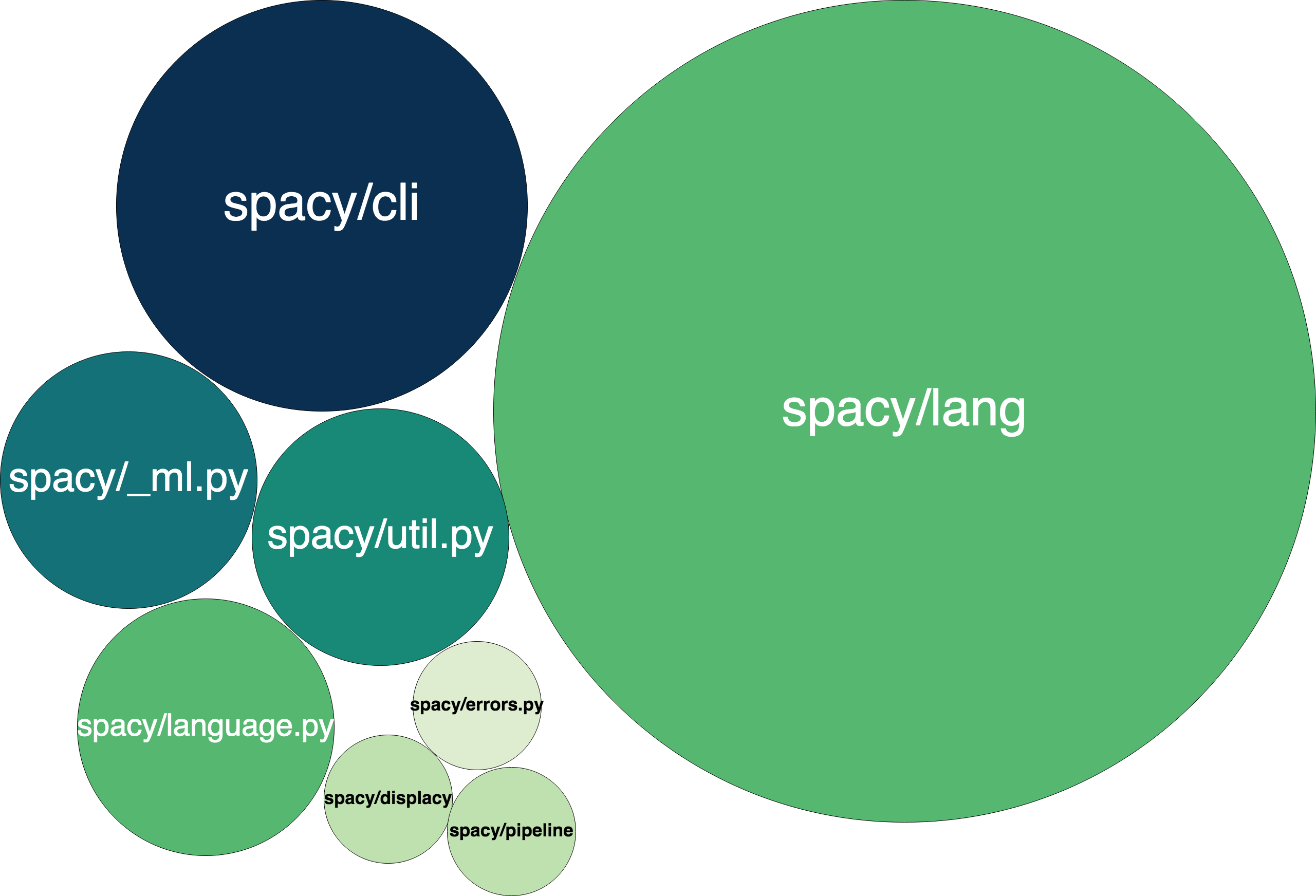

Currently, spaCy does not have an automated code-coverage pipeline setup or any code coverage documentation at all. According to our estimates using python library coverage7, SpaCy has 63% line coverage and 58% branch coverage, with spacy/cli module having the lowest coverage of 19%. We make an overview of this below:

Sidenote: While making this infographic, we realized that there is no good open-source visualization tool that can present an overview of module-wise code coverage so this might be an interesting opportunity.

We state the following for reproducibility: To get these numbers, we ran coverage on all files in spacy/, excluding spacy/tests/*(the tests themselves), checking both basic and slow tests. Specifically, we ran coverage run --branch --source=spacy --omit=spacy/tests/* -m py.test spacy --slow.

Based on our limited analysis, a major portion of the low coverage in spacy/lang is due to example code. This would better belong in the documentation and improve the code coverage metrics.

In many cases, coverage numbers were low because of literal definitions as in this file. We did find that spaCy gives a list of Dos and Don’ts in order to keep the behaviour of tests consistent and predictable. This list gives a summation of the basic conventions which need to be followed as much as possible8.

The current coverage is only satisfactory and could be improved.

Where are most of the contributors?

To find possible coding hotspots, we decided to take a look at the issue page once again. We looked at all open issues and then zoomed in further with two labels. First we took a look at the most recently updated issues, but this returned mostly bugs which needed to be fixed and were therefore opened less than a day ago. Therefore we decided to take a look at the pinned issues. These issues were opened in december 2018 by ines, one of the maintainers for spaCy. These issues are for the model adding for new languages and making inaccurate pre-trained models more predictable. These pinned issues are still very frequently used, with one of the latest mentions being only 4 hours ago ( at the time of writing). One other issue which is 3rd in line of most commented, is the issue to add example sentences in different languages, for the training of models.

Reducing Technical Debt? How-To…

An assessment for quality and maintainability does not necessarily have to be done manually. There are multiple tools available on the market for creating an analysis and providing refactoring suggestions. For this essay, we will discuss the output of the tools Sigrid and SonarQube. While Sigrid 9 is a commercial tool, SonarQube is open-source in it’s most basic variant. For open-source projects like spaCy, it’s available for free as a web-app at sonarcloud.io.

Analysis by Sigrid and SonarQube

While Sigrid and SonarQube both provide an analysis for maintainability, they are different in multiple manners. Sigrid subdivides maintainability as can be seen in the second table and gives a lower general rating than SonarQube. In addition to maintainability, SonarQube also provides a rating on reliability and security - all of this ratings are based on number of bugs, number of “code smells” and number of “security hotspots” found by SonarQube’s analyzer. In the following sections we are discussing some of the suggestions by Sigrid and SonarQube.

| Analysis | Rating by Sigrid (0.5 to 5.5 (best)) | Rating by SonarQube 10 |

|---|---|---|

| Reliability | 1 | |

| Security | 5 | |

| Maintainability | 2.8 (see below) | 5 |

| Analysis | Maintainability ratings by Sigrid |

|---|---|

| Volume | 4.1 |

| Duplication | 2.9 |

| Unit size | 0.5 |

| Unit complexity | 2.7 |

| Unit interfacing | 2.7 |

| Module coupling | 4.9 |

| Component balance | 2.0 |

| Component independence | 2.2 |

| Component entanglement | 5.5 |

Duplications

Both tools provide a list of duplicated code-blocks. Duplicated code can be seen as technical debt - should a developer for example want to improve the implementation of a specific function, he/she has to change it at multiple locations and might not be aware of that fact.

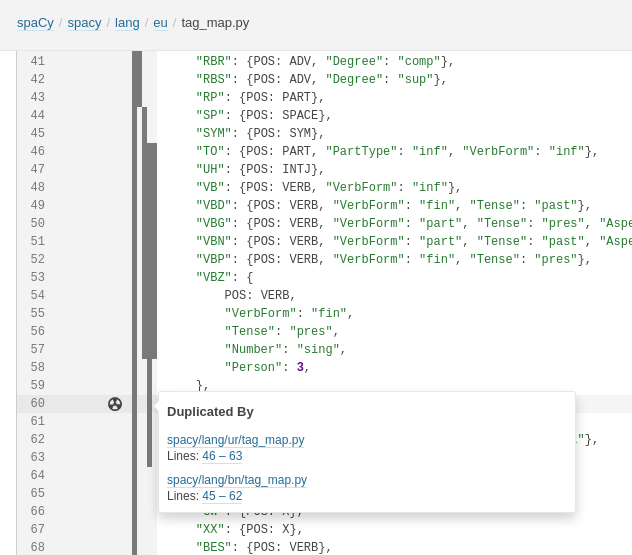

Sigrid shows 100 cases of duplicated code, consisting of 10 to 100 lines of code, sometimes with up to 20 occurences11. Most of them are between files with the same filename (in different folders for different languages).

SonarQube provides a better user experience for showing duplicated code than Sigrid, however we have not found any code with it that should be refactored. None of the duplications within the lang-module are duplicated for all languages.

Many of these duplications are in the language-specific part of spaCy. Duplicated code barely contains any algorithms, but mostly just a list of parameters. Unless there is a complete architectural overhaul we consider it unlikely that someone touches code for multiple languages at the same time.

Unit Size, Complexity and Interfacing by Sigrid

Sigrid analyzes files and functions/methods according to lines of code, McCabe complexity and number of parameters.

Many of the suggestions by Sigrid are for files like tag_map.py, morph_rules.py, tokenizer_exceptions.py and norm_exceptions.py, obviously containing rules and exceptions for different languages. Some of them are huge - e.g. _tokenizer_exceptions_list.py with over 15 kLOC.

The most complex files in spaCy seem to be model.js, universe.js and Scorer.score.

Sigrid also found the ~400 LOC function debug_data. It seems to contain debug code for printing and might be split up into different parts for ner, textcat, parser and tagger. This is not related to the big-picture architecture of spaCy, nevertheless our team intends to take a closer look.

Sigrid suggests to avoid functions with many parameters (up to 35 is spaCy for train.py). As most of these parameters have default values associated with them, they need not be specified at invocation and we consider those issues unimportant.

Module Coupling, Component Independence and Entanglement by Sigrid

The results for this analyses by Sigrid heavily depends on the compartmentisation that was initially done when importing the project in Sigrid (see previous blog posts). While not necessarily being representative, they still provide some further insights.

Sigrid gives component entanglement the best rating possible, however finds some issues with module coupling and component independence. It detects that util.py, util.js, errors.py are heavily used by different modules, which seems pretty obvious for us humans. However there are also the files _ml.py and compat.py which are used by multiple components. This is not not clear from the naming of those files.

Results

Both evaluated tools provide new insight for the spaCy codebase, even thought some of their functionality like analyzing coding activity was not tested. With the help of Sonarqube we have found a small duplicated if-else branch that we are now trying to fix, Sigrid suggested to change some debug-print-code. We are currently taking a closer look into whether splitting up this functionality into corresponding modules makes sense.

I wish to contribute… where do I sign?

The approach towards integrating new features (as employed by the team behind spaCy) is well documented in the contributing.md file on github. This page gives an overview of how the spaCy project is organised and how developers, can get involved. They make a clear distinction between bugs-fixes and feature contributions. Alongwith Matthew Honnibal and Ines Montani (co-founders, ExplosionAI) this repository is maintained by a couple of core contributors namely, Sofie Van Landeghem and Adriane Boyd. Issues are to be submitted based on a custom issue template. The issues are well categorized with carefully curated labels. The team reviews these annotations from time to time, suggesting or adding missing labels to submitted issues as we found out first-hand! There are clear directives and distinctions (of what constitutes spaCy or is a third party dependency) towards opening an issue or making a pull request, which should be followed. A PR needs to be made from a forked repository which additionally requires building spaCy from source using a pre-provided requirements file that helps ensure the added changes do not break the source code.

The proposed code is required to follow PEP8 style-guide for python. Additional linting and formatting modules (used by spaCy v2.1.0) like flake8 and black are bundled while building spaCy from source. There is an exhaustive documentation available about the code conventions that need to be followed in both Python and Cython related code. In case of a feature development type contributions, individual unit-tests are expected to be made in the pull request in addition to updating the documentation of the related feature.

Making ourselves useful!

As mentioned in our anaylsis of spaCy’s product roadmap, the team maintains a separate ‘plugins and project ideas’ thread as a proxy for pinning issues related to new feature development. To this end we have proposed that spaCy maintains a separate roadmap for clarity. It has been categorised as a ‘good first issue’ by Sofie, which seems to be promising!12

-

4+1 Architectural Views, https://en.wikipedia.org/wiki/4%2B1_architectural_view_model ↩

-

Contributing, https://github.com/explosion/spaCy/blob/master/CONTRIBUTING.md ↩

-

Fixing Bugs, https://github.com/explosion/spaCy/blob/master/CONTRIBUTING.md#fixing-bugs ↩

-

Azure .yaml file, https://github.com/explosion/spaCy/blob/master/azure-pipelines.yml ↩

-

Don’t test o 3.7 for now to speed up builds, https://github.com/explosion/spaCy/commit/e232356f413f29c2cf9c2ddc86f623bdc05a0ade ↩

-

spaCy Tests, https://github.com/explosion/spaCy/tree/master/spacy/tests#spacy-tests ↩ ↩2

-

Coverage.py, https://coverage.readthedocs.io/en/coverage-5.0.4/ ↩

-

Dos and Dont’s , https://gitlab.com/connectio/spaCy/-/blob/master/spacy/tests/README.md#dos-and-donts ↩

-

sigrid says, https://sigrid-says.com ↩

-

Sigrid uses a star-rating reaching from 0.5 stars to 5.5 stars (best). SonarQube uses a rating from A (best) to E. In the comparison we map letter A to 5 stars down to 1 star for letter E. ↩

-

https://sigrid-says.com/maintainability/tudelft/spacy/refactoring-candidates/duplication/7251141 ↩

-

Good First Issue list, https://github.com/explosion/spaCy/contribute ↩