Testing is the key to success. Quality is never an accident. All code is guilty, until proven innocent. Folk knowledge in Software Engineering is full of sayings like these ones. Nevertheless, we still live in a world where code quality is most of the times undervalued. The importance of testing, refactoring and evaluating technical debt should however not be neglected, since they are of major importance to safeguard code quality and architectural integrity.

The fact that open-source projects live from community contributions, as also reinforced by the core team member Bryan Van de Ven in a podcast, makes things harder.

there’s a lot of things to do and presently, not enough people to do them,

If, on one hand, it is evident that contributing for new features in open source projects is essential (check our roadmap in essay 1 for more information about those juicy features!), on the other hand, it is important to follow contribution guidelines and templates, and it is even more fundamental to adopt good Testing and Code Quality practices.

Bokeh and its quality processes

Quality is a must. Bokeh is no exception. In order to enforce this quality, only a hand-full (the core team) of developers are allowed to merge new code to the master branch, which means that every line of code is evaluated and checked whether it follows the core team guidelines. This helps the software to maintain a certain level and a coherent code style.

The core-team is also very active within the community, discussing all the issues and searching for the best solutions. These constant interactions allows trust to be built between the community and the core-team, and trust generates confidence and promotes better discussions, which in turn leads to the best possible outcome. Code cannot be separated from the people making it.

As Sara Mei’s said in her keynote on “livable code” at RailsConf 20181:

“Build trust among team members and strengthen their connection. The code base will follow”

Bokeh, besides having the core-team as the quality judges, also uses LGTM, which is an awesome automated tool that evaluates code quality, has an unparalleled security analysis, automates code review and executes a deep semantic code search. With this tool, Bokeh prevents bugs from being merged but also grades its code quality comparatively to other projects.

Oh, did you think that was it? No. There is more!

Bokeh uses Github Continuous Integration, which means that they continuously integrate! What are they integrating you ask? Code, Tests, Deployment! Every time someone pushes something to the master branch at Github, the CI is activated and everything is tested again.2

This way, Bokeh can assure code quality.

Bokeh never stops: a look into the CI processes

Github CI, or Github Actions as they named it, automates Bokeh’s workflow, easing the development process. This tool automatically builds to Windows, Ubuntu, macOS but also runs a plethora of tests making sure that almost nothing is broken!

Every push to the master branch or any Pull Request branch on GitHub automatically triggers a full test build on the Github Continuous Integration service.2 You can check a list of the current and previous builds here.

Just a small glimpse of whats going on behind the curtains:

Figure: Github CI test checks

Testing Bokeh, the importance of tests and how to build a better future

It is fair to say that testing is not an easy job. In fact, Bryan stated in a recent interview conducted by us that testing is one of the hardest areas on the project.

[…] one of the hardest areas is simply the enormous test surface for a project like Bokeh. If you look at the entire matrix of Python version x OS version x Browser version x Jupyter version x Tornado version x… It’s just vastly more than we have resources to run real tests for. Things continue to improve as we are able to, but it’s an ongoing challenge.

It is not surprising to say then that Bokeh relies heavily on user input, as shown in the figure below. Users contribute mainly through visual and manual testing, reporting the issues they find while running their applications.345

Figure: Label tag

However, a large and multi-language system like Bokeh cannot rely only on those kinds of tests to maintain capability and prevent regressions. For the past months the team has been asking for improvements in their automated testing process and encouraging contributions from the community, as shown in the figure below. The developer guide dedicates a whole page to testing. The core team mentality about the importance of tests is clearly stated there6:

In order to help keep Bokeh maintainable, all Pull Requests that touch code should normally be accompanied by relevant tests […] a Pull Request without tests may not be merged.

Figure: Bokeh encourages community participation

Besides the “no test, no merge” policy for new features, the team is also concerned with the quality of tests7 even though it is not a priority.

Recently, all the test files were aggregated in a single tests directory.8 The tests directory consists of Python unit tests, JavaScript unit tests, browser integration tests, codebase tests and example tests, grouping 41.7k lines of code, corresponding to 30.0% of the number of code lines in the project, 139.1k9. For instance, the example tests, among other things, check for image differences and can take 30 minutes to run.10

Unfortunately, Bokeh doesn’t offer information regarding code coverage and the matter is not covered in recent issues nor Pull Requests.

Bokeh’s hot activity

We used CodeScene to figure out the hotspots of Bokeh. Our guess was that the most important components were constantly changing, refactored or having its bugs fixed. And we were right! The hottest spot of Bokeh is document.py which is a core component of Bokeh’s architecture: this Python file describes how a document works, which is “nothing” more than a collection of widgets and plots that can be served to users. document.py reigns on top but figure.py, annotations.py, tools.py and some other components also have a quite nice activity going on, and again, all of those are core components to Bokeh since combined they are what defines a Bokeh’s plot.

This shows that Bokeh is active and looking forward to get better each day focusing on what it really matters!

Figure: Hotspots: Red means hot!

We also noticed that there were several modules within BokehJS that have some activity going on, which makes perfect sense because, as you will see in the next section, BokehJS is the component that will suffer the most changes in the future.

From the architecture to the roadmap

There is always room for improvement and, as we mentioned in the roadmap of our first blog post, Bokeh has a great plan for the future.

The developers are highly concerned about improving BokehJS1112, as they want to develop BokehJS as a first-class JavaScript library. The core team expects this to attract a wider pool of contributors interested in maintaining the project13 and helping with the development of new features, such as better animations, transitions and events.

We expect that in the next months BokehJS module gets a lot of activity, since, as shown from the references above, the code is not yet ready for all the changes.

A look into Bokeh’s code quality

We already know that Bokeh has two independent parts that work together to create awesome stuff: Bokeh and BokehJS. In order to get started with this section, we must look into the structure and code distribution of both, along with how the code is maintained and finally describe the quality of the code regarding these concepts.

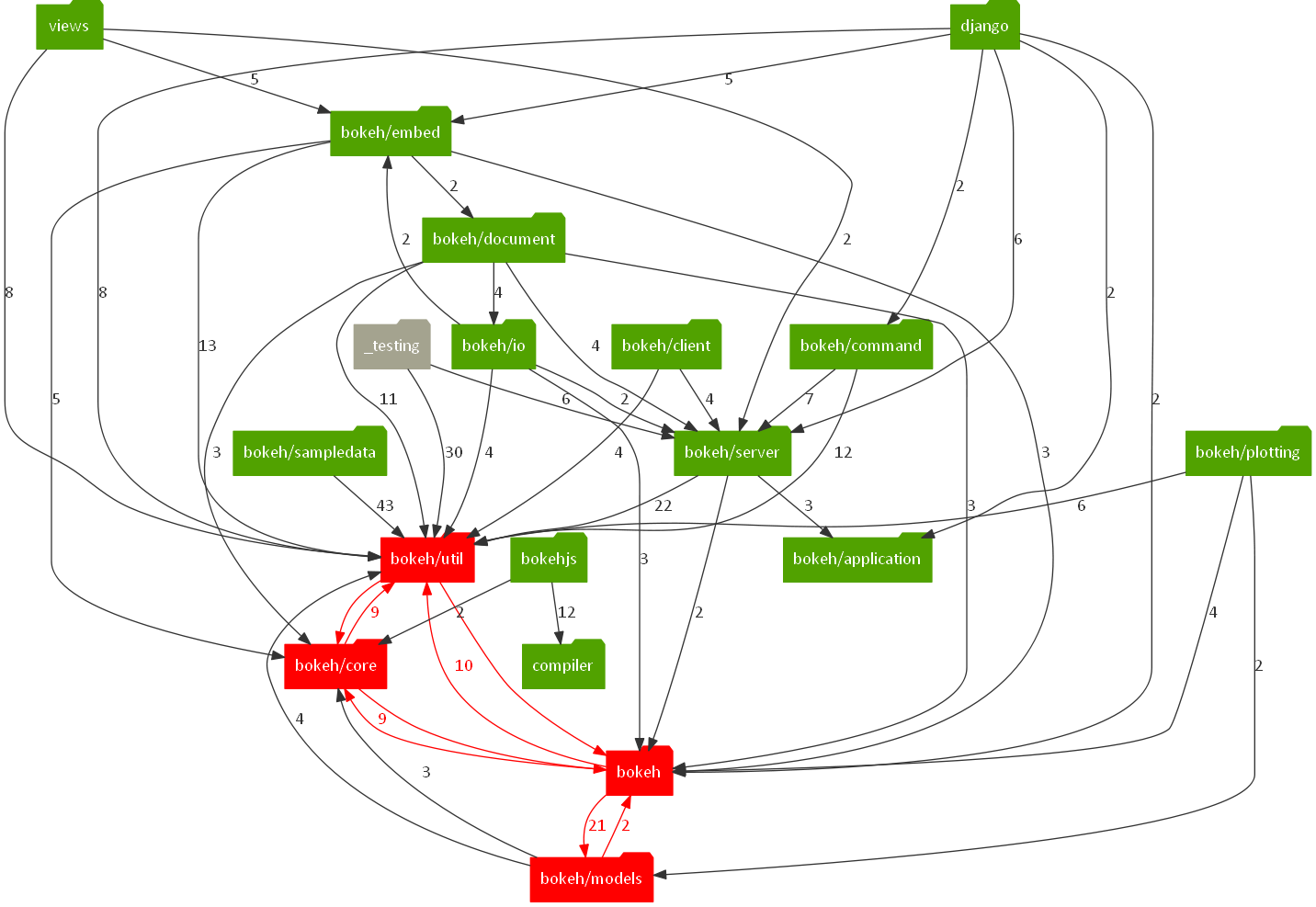

First off, we start with a dependency graph of the main components in Bokeh repository:

Figure: Bokeh's main modules dependency graph

With this we can discuss one of the concepts regarding code quality and maintainability: cyclic dependencies. These occur when two modules/components depend on each other to work, that is, there are calls from module 1 inside module 2 and vice-versa. In the previous graph representation, we have identified 4 modules that suffer from this (colored in red). Perhaps the most serious case would be the bokeh module (Python side), that has cyclic dependencies with 3 different modules, so a change into this module may trigger errors in the modules bokeh/util, bokeh/core, bokeh/models, and also a change in any of these 3 may trigger an error in bokeh as well.

Another important issue to address is code recycling. This refers to when a chunk of code is present in many modules, and in order to give more consistency and avoid code repetition, a new module is created where that chunk is called from whenever needed. A very recent issue has been brought up regarding this in the BokehJS module, and Bokeh team is trying to improve this for future releases. Here one can appreciate that the team is concerned about their code quality, imposing code changes to improve it whenever possible. Other examples related to this are the introduction of global terminologies to help achieve an organizational standard, and further resources redistribution to make bokeh more managable, as can be seen in this issue.

Another interesting metric is how many Lines of Code (LOC) are destined for testing. The following statistics are taken from LGMT:

Code density from root (/):

| Component | LOC (amount) | LOC (aprox %) |

|---|---|---|

| bokeh | 40.8k | 29.3% |

| bokehjs/test | 15.6k | 11.2% |

| bokehjs/< others > | 39.7k | 28.5% |

| conda.recipe | 2 | ~0% |

| docker-tools | 30 | ~0% |

| examples | 11.7k | 8.4% |

| scripts | 1.4k | 1% |

| sphinx | 3.4k | 2.4% |

| tests | 26.1k | 18.8% |

| total | 139187 | 100% |

disclaimer: the test module is Python related and bokehjs/test is JS related

This gives us a total of 41.7k LOC (30%) of code destined only for testing, which reflects the efforts of Bokeh’s team to have a well tested developing environment and stable releases.

LGMT also provides a comparison tool to check how Bokeh performs in terms of code quality to similar Python and JavaScript projects. In both cases Bokeh was graded with an A+, being on top of similar projects, such as Ansible (this project is also being studyied in this course: you can learn more about Ansible here).

Refactoring Bokeh

In order to get more insight about where should refactoring be made, we used SonarQube, and the results were rather surprising.

SonarQube identifies 3 types of refactoring candidates (Bugs, Vulnerability and Code Smell), and separates them in 4 different severity levels (Blocker, Critical, Major and Minor).

Bugs

Here we have detected 48 different bugs that could use refactoring in order to improve code quality. Most of them are suggestions, and not many of them apply, but some are really amazing. The most severe (blocker) case found (twice) is in the following code piece:

Figure: Remove return Bug

“By contract, every Python function returns something, even if it’s the None value, which can be returned implicitly by omitting the return statement, or explicitly. The init method is required to return None. A TypeError will be raised if the init method either yields or returns any expression other than None. Returning some expression that evaluates to None will not raise an error, but is considered bad practice.”

The solution is as simple as to remove the return word and code should work.

Vulnerability

This refers to any security vulnerability that the code may have. Here we only have 5 issues/warnings, of which 4 are the same.

-

Phishing (Blocker)

Figure: Noreferrer Bug

“When a link opens a URL in a new tab with target=”_blank”, it is very simple for the opened page to change the location of the original page because the JavaScript variable window.opener is not null and thus “window.opener.location can be set by the opened page. This exposes the user to very simple phishing attacks”

The solution, again is very simple, we only need to add

rel="noopener noreferrer"to the a tag. (noopenerfor newer browsers andnoreferrerfor older) -

Jinja Autoescaping

Figure: Autoescaping Bug

Escaping HTML from template variables prevents switching into any execution context, like < script >. Disabling auto escaping forces developers to manually escape each template variable for the application to be safe. A successful exploitation of a cross-site-scripting vulnerability by an attacker allow him to execute malicious JavaScript code in a user’s web browser. The most severe XSS attacks involve:

- Forced redirection

- Modify presentation of content

- User accounts takeover after disclosure of sensitive information like session cookies or passwords

The solution? just add the

autoescape=Truekwarg to theEnviromentinstantiation. Alternatively, one could specify where the html would be able to auto escape.

Code smells

These are mostly not-so-good coding practices that could be easily improved. Most of them correspond to cognitive complexity, and aim to reduce it by further modularization or code simplification. One example of this would be:

Figure: Curly Braces Bug

“In the absence of enclosing curly braces, the line immediately after a conditional is the one that is conditionally executed. By both convention and good practice, such lines are indented. In the absence of both curly braces and indentation the intent of the original programmer is entirely unclear and perhaps not actually what is executed. Additionally, such code is highly likely to be confusing to maintainers.”

The solution is very straightforward: add curly braces ({})

The most severe (Blocker) issues, however, are:

-

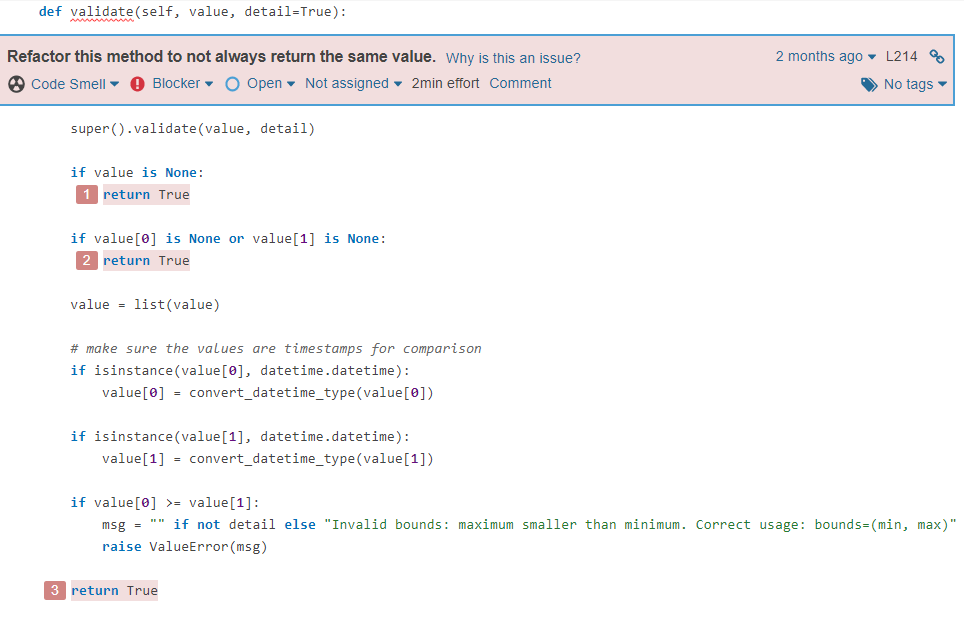

Same Value return

Functions are meant to return different values according to the information they process (There’s no point on having a function that always returns the same value). In

bokeh/core/property/visual.pywe see the following code:Figure: Same Return Bug

As we see into this issue, we can realize that the return value doesn’t really matter, but the goal here is to whether the function raises an exception and the return value is unnecessary. So we will not take into account SonarQube suggestion, but rather propose our own solution: the most logic option would be to replace all of this with a

try/exceptexpression, and change the 2nd and 3rdifwithelif, that way no explicit return value is required and if the conditions are satisfied, the Error will rise anyways. -

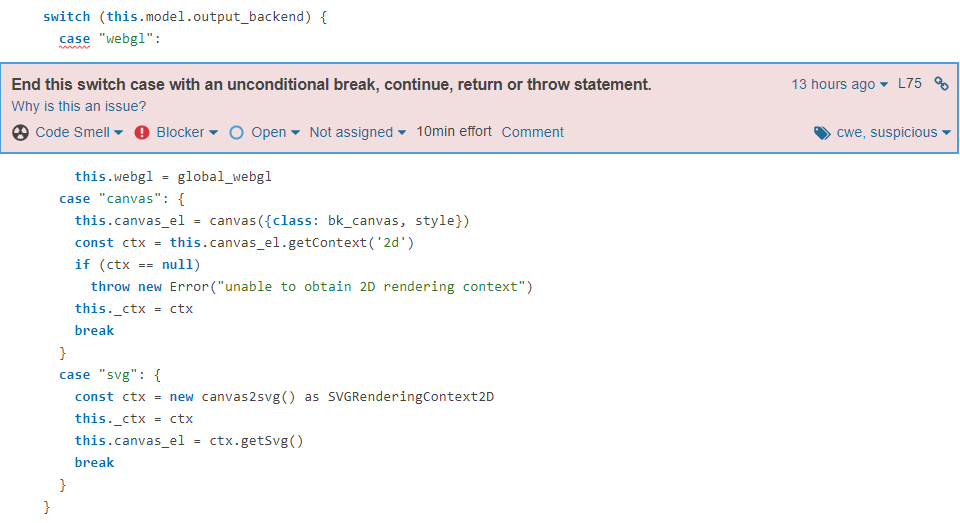

Switch case break

Figure: Switch Case Bug

When the execution is not explicitly terminated at the end of a switch case, it continues to execute the statements of the following case. While this is sometimes intentional, it often is a mistake which leads to unexpected behavior.

In this case it clearly is not intentional, as the two cases are completely different, not related strings. So the correct thing would be to add a

breakstatement right afterthis.webgl = global_webgl

With all of this said, we can see that there is still room for refactoring. Some issues may not have any substantial effect on the execution itself, but that doesn’t mean they don’t improve the code quality. It’s good to see, however, that the issues we have detected are not that serious, and in most cases really easy to solve!

Is it time to pay… the technical debt?

Technical debt is a metaphor coined by Ward Cunningham that reflects the cost of additional rework by choosing a faster solution now, neglecting internal quality, instead of a better one that will imply more work.14

Pull Request #9732 gives an interesting overview on how technical debt works. In this PR a contributor suggested a new feature. One member of the core team commented that the code being changed was originally written without much care, as can be seen in the figure below.

The file was not refactored since it was originally written, as the extra effort was not worth the gain. Other member of the team realizes that they are paying this technical debt gradually, as more pull requests are opened.

Furthermore, recently Bokeh released version 2.0.0. This allowed the developers to shed some technical debt. Bryan commented on this issue in the interview:

[…] 2.0 was the opposite of difficult: it was a great change to shed a lot of technical debt, dropping python 2 and python 3.5 support, e.g. allowed us to make many things much simpler, discard bothersome or outdated things, etc.

We hope to have convinced you that is it not only the foundations that matter, without maintenance and proper care, even the strongest pillar rots. One needs to safeguard the quality and architectural integrity of any software.

-

https://www.youtube.com/watch?v=lI77oMKr5EY ↩

-

https://docs.bokeh.org/en/latest/docs/dev_guide/testing.html#continuous-integration ↩ ↩2

-

https://github.com/bokeh/bokeh/issues/9724 ↩

-

https://github.com/bokeh/bokeh/issues/9522 ↩

-

https://github.com/bokeh/bokeh/issues/9703 ↩

-

https://docs.bokeh.org/en/latest/docs/dev_guide/testing.html#writing-tests ↩

-

https://github.com/bokeh/bokeh/pull/9729#issuecomment-599622371 ↩

-

https://github.com/bokeh/bokeh/issues/9533#issuecomment-567567036 ↩

-

https://lgtm.com/projects/g/bokeh/bokeh/latest/files/?sort=name&dir=ASC&mode=heatmap&showExcluded=true ↩

-

https://discourse.bokeh.org/t/running-the-tests-locally/4996 ↩

-

https://github.com/bokeh/bokeh/pull/9615 ↩

-

https://github.com/bokeh/bokeh/pull/9808 ↩

-

https://bokeh.org/roadmap/ ↩

-

https://www.martinfowler.com/bliki/TechnicalDebt.html ↩