In our previous blogpost, we discussed Micronaut’s architectural patterns and its different views. In this third blogpost of the series, we analyze the quality of Micronaut’s codebase and look into the measures taken to ensure its maintainability.

Overview

Like other projects analyzed in this course, Micronaut is developed as open-source software. The public availability of the code brings the advantage that it is used, and therefore tested, by many users. Moreover, it allows the open-source community to peer review the codebase effectively and contribute improvements to it [1].

With such a large community working together, Micronaut needs a solid CI process to integrate the bug fixes and new functionality proposed by the community.

The Development Activity section discusses the planned roadmap, the ongoing development activities and the key hotspots. The last section, Quality Assessment, provides an analysis of the maintainability, technical debt and potential refactoring strategies of the most active modules.

CI Process

According to Martin Fowler [2], Continuous Integration is a development practice in which individual team members integrate their work frequently and verify each integration with an automated build.

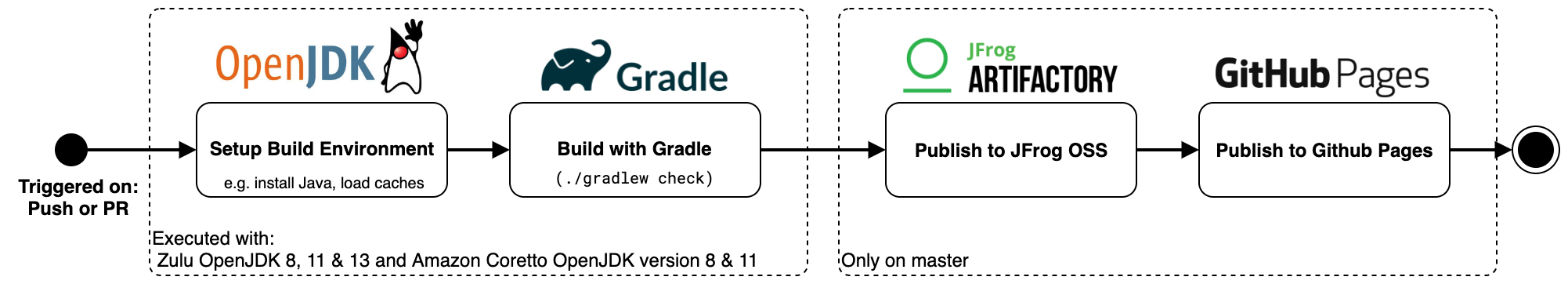

Every push to the repository and every new pull request triggers Micronaut’s automatic build, which is defined with GitHub Actions.

Figure: Micronaut's Automated Build Pipeline

As the figure shows, the most important part is the Gradle build, which is executed on 5 different Java versions. A closer look at the project’s build.gradle file reveals that this includes compilation, the execution of tests, and a static code analysis with Checkstyle.

Afterward, on a master build, the generated jar files are uploaded to JFrog’s OJO, a Maven repository for pre-releases. Finally, the pre-release documentation is updated.

Besides this automatic build, there are a release build, a dependency check, and performance tests.

Tests

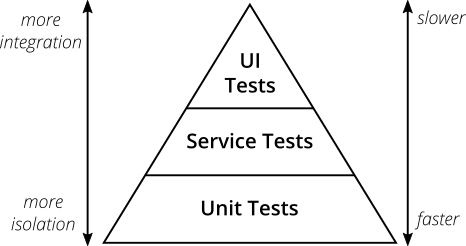

In 2009, Mike Cohn introduced the concept of the test pyramid, which suggests organizing tests in three different layers [3]. From a modern point of view, the concept is simplistic and the naming of the layers is not ideal. However, the basic idea of writing tests with different granularity and having few high-level tests is a good practice for keeping the test suite fast and maintainable [4].

Figure: The Test Pyramid

The Micronaut team writes its tests mainly with Spock, a Groovy testing framework that provides a syntax compatible with behavior-driven development (BDD). Because the tests are not assigned to different granularity levels, we came up with a simple rule to distinguish between them. We categorize every test that is either annotated with @MicronautTest or contains Application.run(...) as a class-level integration test, because in that case an application context is created and beans are wired together (see previous blogpost). We therefore assume that those tests cover multiple classes at once. We assume that each of the remaining tests covers one individual class, and we categorize them as unit tests.

The test-suite modules are an exception. They contain tests written in Java, Kotlin, and Groovy that only access APIs available to the end user. Therefore, we consider them end-to-end tests.
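The classification rule can be sketched in a few lines of Java. This is only an illustration of the rule as described above, not the script we used: the class `TestClassifier`, its method, and the idea of matching on the raw source text of a test file are assumptions made for this example.

```java
import java.util.List;

/** Hypothetical helper that mirrors the classification rule described in the text. */
class TestClassifier {

    enum Category { UNIT, CLASS_LEVEL_INTEGRATION, END_TO_END }

    // Markers taken from the text. Matching on raw source text is a simplification.
    private static final List<String> CONTEXT_MARKERS =
            List.of("@MicronautTest", "Application.run(");

    /**
     * @param module name of the module that contains the test, e.g. "test-suite-kotlin"
     * @param source full source text of the test file
     */
    static Category classify(String module, String source) {
        // Tests in the test-suite modules only use end-user APIs.
        if (module.startsWith("test-suite")) {
            return Category.END_TO_END;
        }
        // An application context is created, so several classes are exercised.
        for (String marker : CONTEXT_MARKERS) {
            if (source.contains(marker)) {
                return Category.CLASS_LEVEL_INTEGRATION;
            }
        }
        return Category.UNIT;
    }
}
```

A plain text match like this can misclassify a test that only mentions a marker in a comment, which is one reason why we call the approach naive.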

We counted the tests per category and measured their execution time on the CI system for the development state at the time of writing.

- 2353 unit tests: 2 min 17 sec
- 1358 class-level integration tests: 5 min 17 sec
- 504 end-to-end tests: 1 min 27 sec

These results show that Micronaut’s tests follow the idea of the test pyramid: there are many unit tests that execute quickly and fewer high-level tests that are slower. Furthermore, they suggest that our naive classification approach is not groundless, since the class-level integration tests take noticeably longer than the unit tests.
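To make the comparison independent of the number of tests per category, the totals can be divided by the test counts. The following sketch does this for the figures listed above. The class name `TestTimings` is made up for this example, and the averages are only a rough indicator, because they ignore setup costs and any parallel execution on the CI system.

```java
/** Hypothetical helper: average execution time per test for the measured categories. */
class TestTimings {

    /** Average wall-clock time per test in milliseconds. */
    static double averageMillis(int tests, int minutes, int seconds) {
        long totalMillis = (minutes * 60L + seconds) * 1000L;
        return (double) totalMillis / tests;
    }

    public static void main(String[] args) {
        // Counts and durations as reported in the list above.
        System.out.printf("unit tests:                    %.0f ms%n", averageMillis(2353, 2, 17));
        System.out.printf("class-level integration tests: %.0f ms%n", averageMillis(1358, 5, 17));
        System.out.printf("end-to-end tests:              %.0f ms%n", averageMillis(504, 1, 27));
    }
}
```

With these numbers, a unit test takes about 58 ms on average, a class-level integration test about 233 ms, and an end-to-end test about 173 ms.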

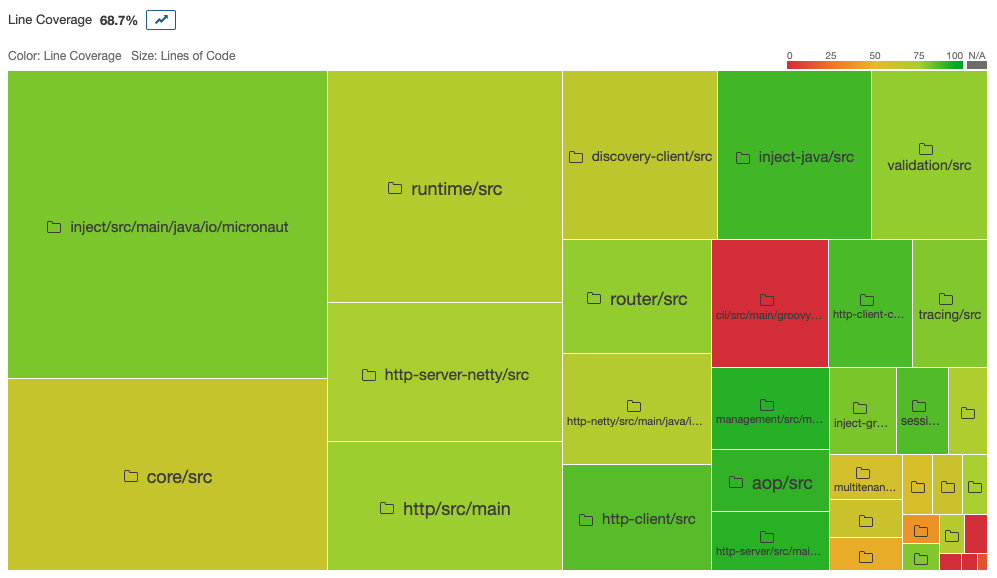

Because Micronaut does not measure test coverage, we added JaCoCo to the Gradle build. Although this broke 4 tests, we measured a line coverage of 68.7% for the remaining tests. To evaluate the test coverage further, we uploaded the JaCoCo data to a self-hosted SonarQube instance.

Figure: SonarQube: Line coverage per module

This view illustrates that the cli module is barely covered. As explained in the previous blogpost, the cli module is an independent application, and we therefore excluded it from the overall coverage calculation. Without this module, the line coverage increases to 75.5%.

According to Martin Fowler [5], the numeric value of test coverage alone says little about the quality of the tests. Nevertheless, he expects “a coverage percentage in the upper 80s or 90s” for well-thought-out tests. Micronaut does not measure coverage continuously, but at 75.5% it is not far below this range.

We think that the careful selection of testing tools, the different granularity of the tests, and the coverage reached demonstrate that tests are taken seriously and are an essential part of the development process. This mindset is perhaps more important than blindly following a metric.

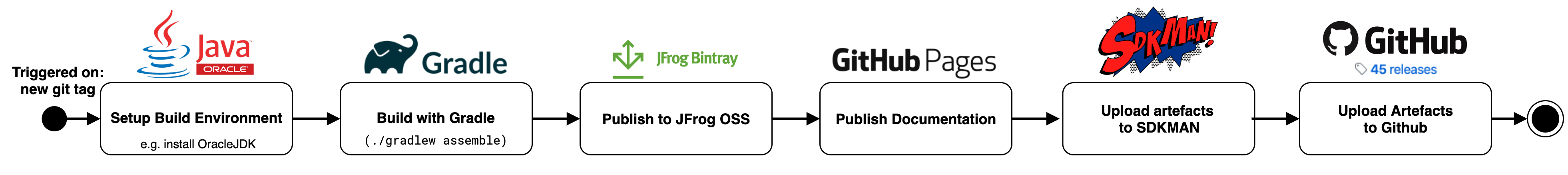

Release

The release build is highly automated. When a new Git tag is added, the following Travis pipeline is executed.

Figure: Release build

Dependency Check

In addition to the automatic build, Dependabot checks the dependencies daily for outdated and insecure versions and automatically commits updated ones.

Figure: Dependabot Commits

Performance Tests

As discussed in the previous blogposts, performance is an important quality criterion for Micronaut and must therefore be measured. Especially interesting is the comparison with competing frameworks, which the team has done in this blogpost.

Development Activity

Analysis of recent activity

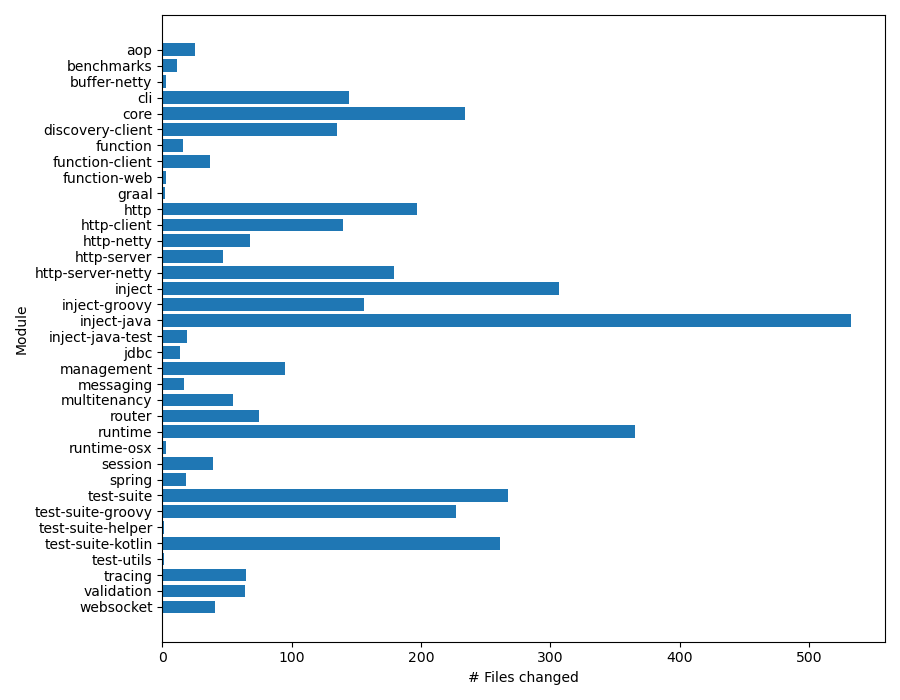

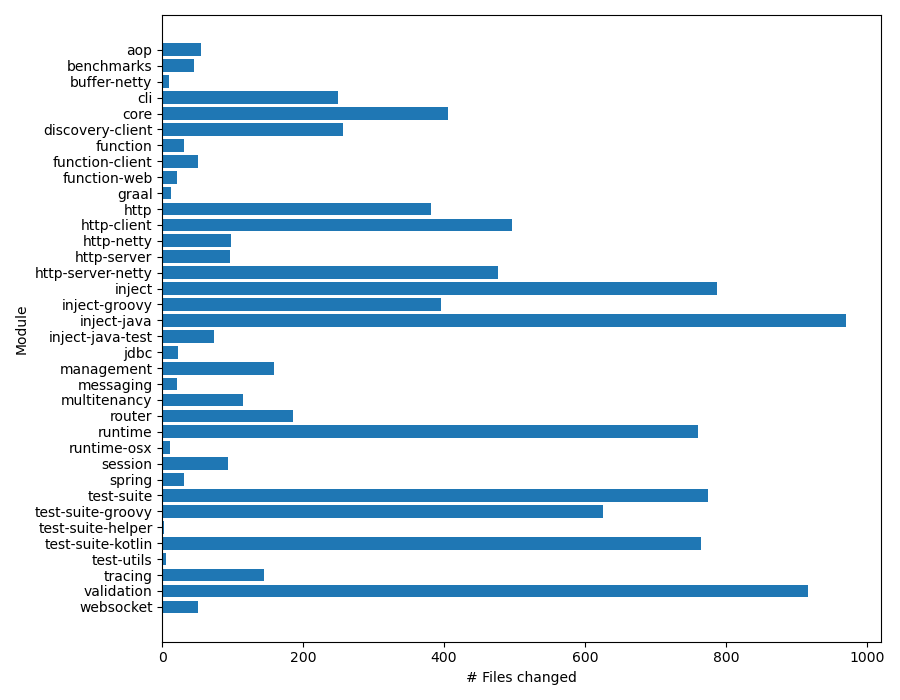

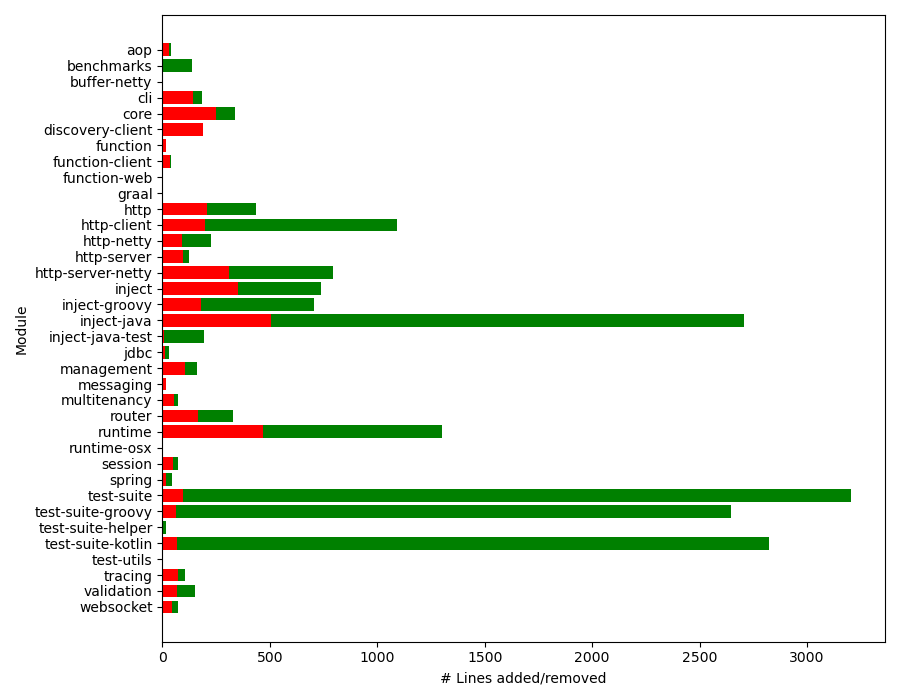

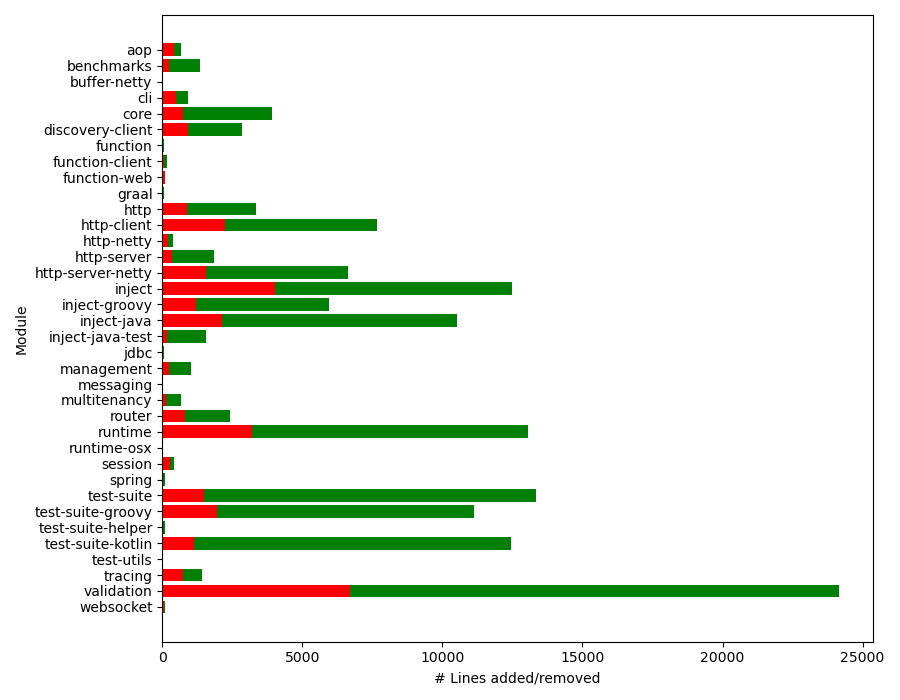

To identify the activity hotspots we analyzed which modules had the most changes in recent coding activity. For that, we measured coding activity in two ways: in terms of the number of files changed, and in terms of the number of lines added and removed.

We used PyDriller [6], a Python framework, to traverse the commits in a given period of time. We aggregated the file changes per module for the past three months and compared them with the changes in the last year to see whether any hotspots have changed over time.
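The aggregation step itself is simple and can be sketched as follows. This is not our PyDriller script. It is a Java illustration of how file changes can be grouped per module, assuming that a module corresponds to the top-level directory of a changed file. The class `ModuleActivity` and the example paths are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: counts file changes per module from a list of changed paths. */
class ModuleActivity {

    /** Assumption: the module is the first path segment, e.g. "inject-java/src/..." -> "inject-java". */
    static String moduleOf(String path) {
        int slash = path.indexOf('/');
        return slash < 0 ? "(root)" : path.substring(0, slash);
    }

    /** Each entry of changedPaths represents one file modification in one commit. */
    static Map<String, Integer> fileChangesPerModule(List<String> changedPaths) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String path : changedPaths) {
            counts.merge(moduleOf(path), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up paths, for illustration only.
        List<String> changes = List.of(
                "inject-java/src/main/java/A.java",
                "inject-java/src/main/java/B.java",
                "runtime/src/main/java/C.java",
                "build.gradle");
        System.out.println(fileChangesPerModule(changes));
    }
}
```

Counting lines instead of files works the same way: instead of adding 1 per modification, the number of added and removed lines of that modification is added.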

Figure: The number of file changes per module over the past three months

Looking at the number of file changes per module over the past three months, we see that the inject-java module is by far the most active module, followed by runtime and inject. The rest of the modules have seen significantly less activity.

Figure: The number of file changes per module over the past twelve months

If we compare this with the activity of the last year, we see that inject-java, runtime, and inject still belong to the most active hotspots. However, they are now accompanied by test-suite, test-suite-kotlin, and validation. It is noteworthy that the modules that have recently been the most active are also hotspots over a longer timespan.

Figure: The number of line changes per module over the past three months

Figure: The number of line changes per module over the past twelve months

In contrast, if we measure coding activity by lines added and removed, the test-suite modules have been the most active, together with the inject-java module. Compared with the activity over the past twelve months, the same trend holds, but with validation being even more active than the aforementioned modules.

Depending on the metrics, we end up with different recent activity hotspots. For file changes, these are inject-java, runtime, and inject, while for line changes the hotspots are test-suite, test-suite-kotlin, inject-java, and test-suite-groovy. However, we can say for certain that inject-java is a hotspot as it is amongst the most active modules for both metrics.

Future activity (Roadmap)

Work on the roadmap has progressed rapidly since our first mention of Micronaut’s roadmap. At the time of writing, a lot of the features from the discussed 2.0.0 milestone have been implemented. Several issues remain in the newly updated 2.0.0.M2 milestone.

We now identify which architectural components are likely to be affected by the upcoming features in this milestone. For each issue, we list the modules that are likely to be affected. We determined this by checking whether a specific class or module is mentioned in the issue, and otherwise by searching the codebase for code related to the issue. For instance, issue #1421 concerns the behavior of the bean context. The class that is probably affected is therefore DefaultBeanContext.java, which belongs to the inject module.

- #1418 Fail compilation if a concrete class has an introduction advice annotation: inject-java
- #1421 Throw an exception if the bean context is used after its closed: inject
- #1859 Don’t generate beans if @inject is present but the type is not declared a bean: inject-java
- #1969 Remove the use of Jackson for MapToObjectConverter: runtime
- #2686 Support random available port for management endpoints: management
- #2732 Refactor server filters to be registered and found more like client filters: http-server
- #2809 Support RxJava 3: runtime
- #2811 Support Groovy 3: inject-groovy, test-suite-groovy
- #2869 Refactor multipart upload route execution: http-server-netty
- #2953 Specifying --test spock errors create-app in 2.0.0.M1: cli
- #2959 Can’t introduce own @Client annotation: http-client
- #2958 Invalid type bound from @ConfigurationProperties: inject

The modules that occur most often are inject, inject-java, and runtime. It is interesting to see that this is in line with the hotspots in the activity of the last three months, as identified in the previous section.

Quality Assessment

In the section above, we concluded that inject, runtime, and inject-java are likely to be affected soon. As explained in our previous blogpost, runtime and inject are central modules, and inject-java is executed at compile time to process the annotations.

We analyzed the development state at the time of writing using SonarQube, a static code analysis tool that scans the codebase using a preconfigured set of rules, calculates file-level metrics, and displays them on a dashboard. The SonarQube evaluation is similar to the Checkstyle analysis mentioned in the CI Process section but contains a larger set of rules.

Technical Debt assessment

Technical debt reflects the extra development cost that arises when code that is easy to implement in the short run is used instead of the best overall solution [7]. Reducing technical debt in a system helps to improve the maintainability of the source code and to reduce the effort required to develop new functionality.

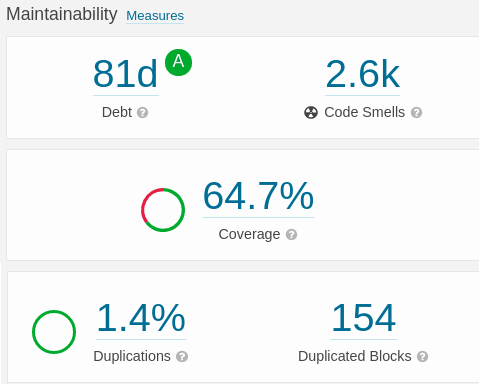

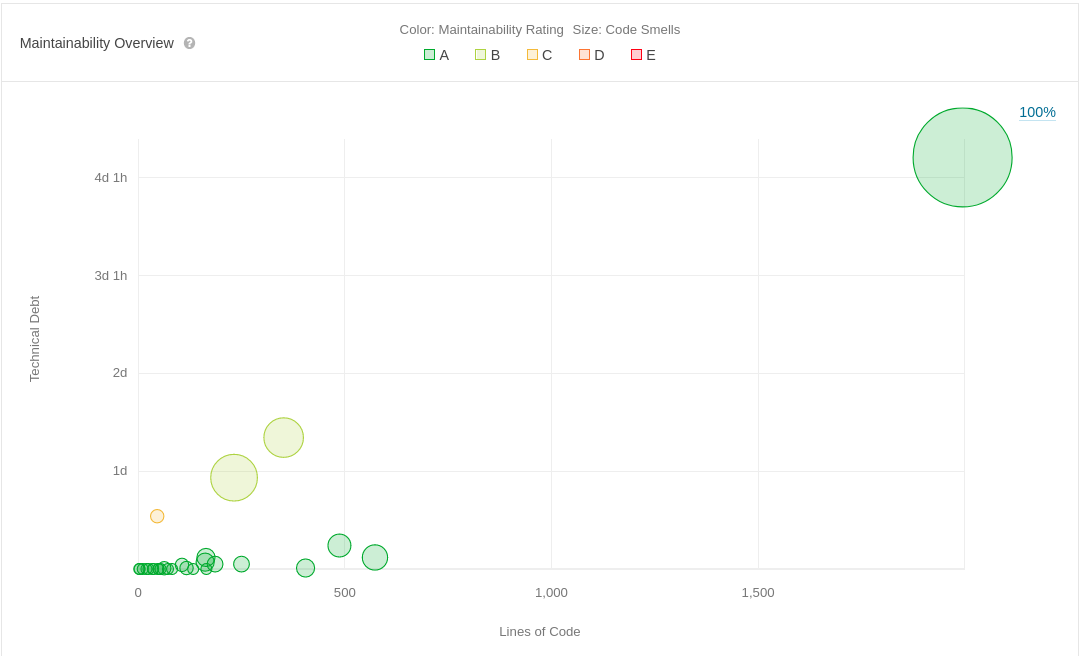

The figure below shows the results of the analysis. SonarQube estimates that it would take 81 days to fix all technical debt. The unit “day” is based on the Software Quality Assessment based on Lifecycle Expectations (SQALE) methodology [8].

Figure: Technical debt analysis by SonarQube

SonarQube also gives us some insight into the number of code smells: about 2.6k in total, of which 646 are critical and 905 are major. However, since the rule set was not customized for Micronaut, some of the SonarQube violations may not be good predictors of fault-proneness. Duplicated lines make up about 1.4% of the code, which is almost insignificant. The test coverage of 64.7% was discussed in the previous section.

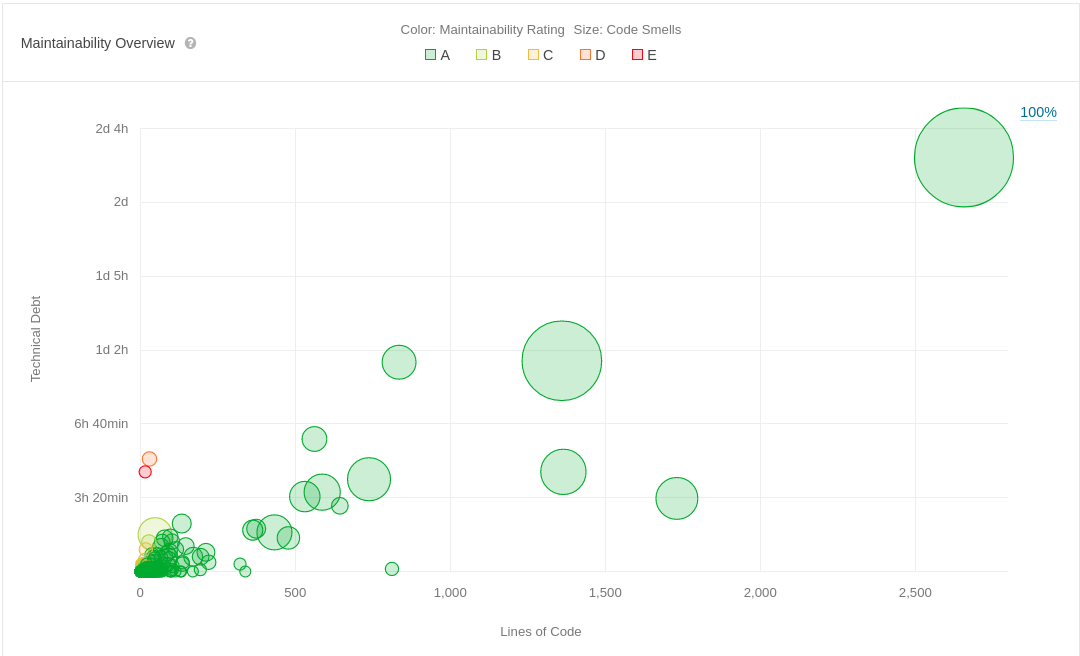

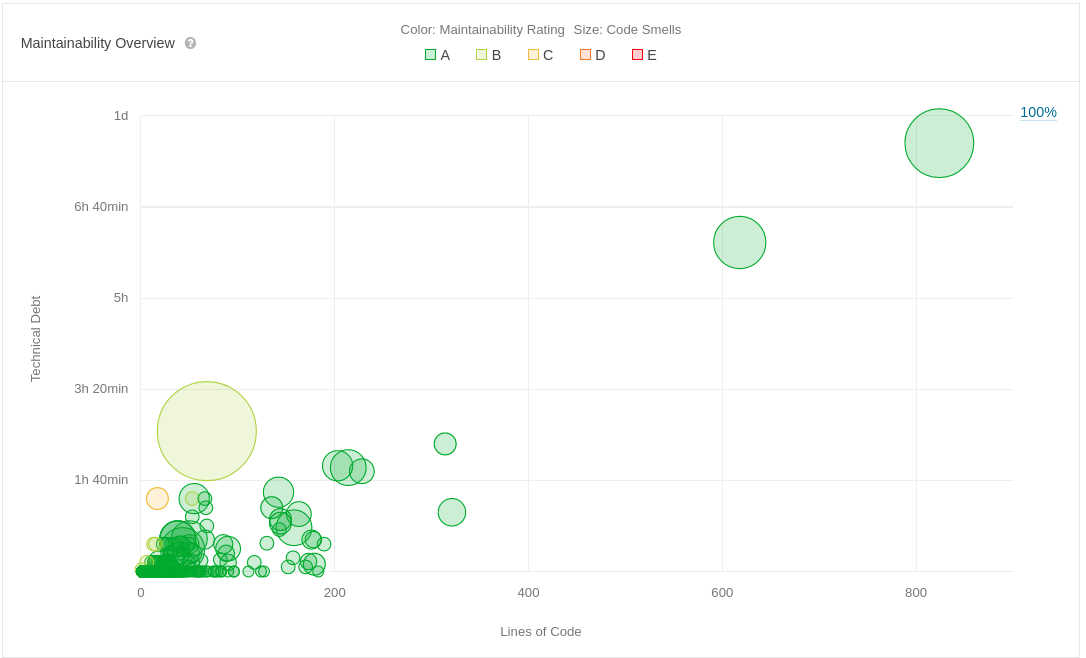

In the inject module, which consists of 22346 lines of code, SonarQube has detected 540 code smells and estimates 14 days to fix them.

Figure: Technical debt for the inject module

In the runtime module, which consists of 12367 lines of code, SonarQube has detected 299 code smells and estimates 7.5 days to fix them.

Figure: Technical debt for the runtime module

In the inject-java module, which consists of 5876 lines of code, SonarQube has detected 94 code smells and estimates 8 days to fix them.

Figure: Technical debt for the inject-java module
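Because the three modules differ considerably in size, the absolute numbers are easier to compare when they are normalized. The sketch below computes the number of code smells per thousand lines of code and the average remediation time per smell from the figures above. The class `DebtDensity` is hypothetical, and the conversion of one “day” into 8 working hours is an assumption made for this example. We did not check the setting of our SonarQube instance.

```java
/** Hypothetical helper: normalizes the SonarQube figures reported for a module. */
class DebtDensity {

    // Assumption for this sketch: one "day" of technical debt equals 8 working hours.
    static final int ASSUMED_MINUTES_PER_DAY = 8 * 60;

    /** Code smells per 1000 lines of code. */
    static double smellsPerKloc(int codeSmells, int linesOfCode) {
        return codeSmells * 1000.0 / linesOfCode;
    }

    /** Average estimated remediation time per code smell in minutes. */
    static double minutesPerSmell(double debtDays, int codeSmells) {
        return debtDays * ASSUMED_MINUTES_PER_DAY / codeSmells;
    }

    public static void main(String[] args) {
        // Figures as reported above: lines of code, code smells, estimated days.
        System.out.printf("inject:      %.1f smells/kLOC, %.0f min/smell%n",
                smellsPerKloc(540, 22346), minutesPerSmell(14, 540));
        System.out.printf("runtime:     %.1f smells/kLOC, %.0f min/smell%n",
                smellsPerKloc(299, 12367), minutesPerSmell(7.5, 299));
        System.out.printf("inject-java: %.1f smells/kLOC, %.0f min/smell%n",
                smellsPerKloc(94, 5876), minutesPerSmell(8, 94));
    }
}
```

Under this assumption, inject and runtime both have roughly 24 code smells per thousand lines, while inject-java has about 16. The estimated effort per smell, however, is considerably higher for inject-java (around 41 minutes) than for the other two modules (around 12 minutes each).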

Potential Refactoring

To improve the quality of the three modules inject, runtime, and inject-java, we suggest first resolving the most frequent code smells, which are:

Reduce the cognitive complexity of methods (117 code smells)

To keep code maintainable, functions should be simple and readable so that they can be understood intuitively [9]. Cognitive complexity is a metric that tries to express how understandable a method is. The rule therefore suggests refactoring a method as soon as its complexity exceeds a certain threshold. Good refactoring strategies are reducing nesting, extracting parts into other methods, and using fewer variables [10].
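The following sketch illustrates two of these strategies, reducing nesting with guard clauses and extracting a helper method. The class `PortResolver` and its methods are invented for this example and are not taken from the Micronaut codebase. Both variants are meant to behave identically.

```java
/** Hypothetical example: the same logic before and after reducing nesting. */
class PortResolver {

    // Before: three levels of nesting and a mutable result variable.
    static int resolveNested(String value, int fallback) {
        int result = fallback;
        if (value != null) {
            if (!value.isEmpty()) {
                int parsed = Integer.parseInt(value);
                if (parsed >= 0 && parsed <= 65535) {
                    result = parsed;
                }
            }
        }
        return result;
    }

    // After: a guard clause handles the trivial cases first,
    // and the range check is extracted into a named helper.
    static int resolveFlat(String value, int fallback) {
        if (value == null || value.isEmpty()) {
            return fallback;
        }
        int parsed = Integer.parseInt(value);
        return isValidPort(parsed) ? parsed : fallback;
    }

    private static boolean isValidPort(int port) {
        return port >= 0 && port <= 65535;
    }
}
```

The refactored method has no nested conditions, so a reader can follow it from top to bottom without keeping track of several open branches.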

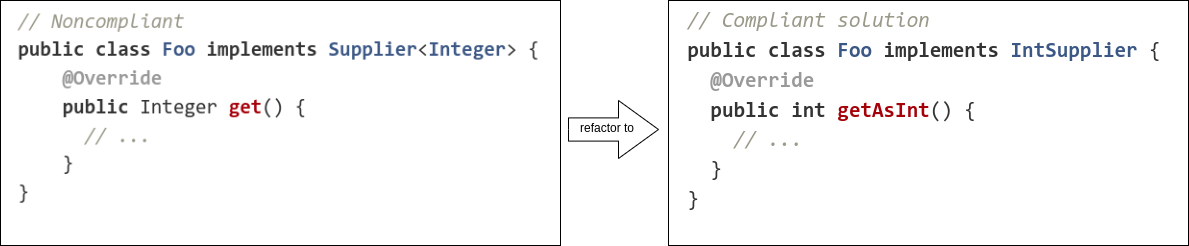

Functional interfaces should be as specialized as possible (84 code smells)

It is important to use the specialized functional interfaces from the Java standard library so that arguments can be passed as primitive types and the compiler does not need to insert autoboxing conversions [11]. To refactor, the generic interface (e.g. Function<Integer,R>) must be replaced with the specialized one (IntFunction<R>).
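A minimal sketch of this replacement, using the two interfaces named above. The class `SpecializedInterfaces` and its methods are made up for illustration.

```java
import java.util.function.Function;
import java.util.function.IntFunction;

/** Hypothetical example: generic versus specialized functional interface. */
class SpecializedInterfaces {

    // Generic interface: the int argument is boxed into an Integer on every call.
    static String describeBoxed(Function<Integer, String> formatter, int value) {
        return formatter.apply(value);
    }

    // Specialized interface: the int argument is passed as a primitive.
    static String describePrimitive(IntFunction<String> formatter, int value) {
        return formatter.apply(value);
    }

    public static void main(String[] args) {
        // The lambda itself does not need to change, only the parameter type.
        System.out.println(describeBoxed(n -> "port " + n, 8080));
        System.out.println(describePrimitive(n -> "port " + n, 8080));
    }
}
```

Callers that pass a lambda are usually unaffected by the change, which makes this code smell comparatively cheap to fix.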

Figure: Functional interfaces should be as specialized as possible

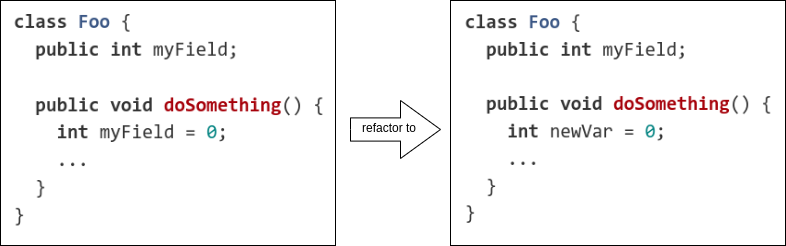

Local variables should not shadow class fields (65 code smells)

When a variable that is already defined in an outer scope is redefined, it is hard for the reader to see which value the name refers to. To avoid this, variables in the inner scope should be given a different name [12].
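The following sketch shows how shadowing can hide a mistake and how renaming the local variable makes the intent explicit. The class `RequestCounter` is a hypothetical example, not code from Micronaut.

```java
/** Hypothetical example: a local variable that shadows a class field. */
class RequestCounter {

    private int total;

    // Shadowing: the local variable 'total' hides the field of the same name,
    // so the field is never updated even though the code reads as if it were.
    int addShadowed(int[] batch) {
        int total = 0;
        for (int size : batch) {
            total += size;
        }
        return total;
    }

    // Renamed: the local variable has its own name, and the update of the field is explicit.
    int add(int[] batch) {
        int batchTotal = 0;
        for (int size : batch) {
            batchTotal += size;
        }
        total += batchTotal;
        return total;
    }

    int total() {
        return total;
    }
}
```

In the first method, a reader has to notice the local declaration to realize that the field stays unchanged. In the second, the two names make it obvious which value is being modified.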

Figure: Rename variable in inner scope to avoid shadowing class fields

In conclusion, the results presented by SonarQube leave little room for improvement. This suggests that Micronaut’s CI processes and development mindset have a positive impact on the overall quality.

1. Open Source Software Development. Open Source Software (OSS) Quality Assurance: A Survey Paper

2. Martin Fowler. Continuous Integration. https://martinfowler.com/articles/continuousIntegration.html

3. Mike Cohn. Succeeding with Agile. Addison-Wesley Professional, 2009. https://www.oreilly.com/library/view/succeeding-with-agile/9780321660534/

4. Ham Vocke. The Practical Test Pyramid. 2018. https://martinfowler.com/articles/practical-test-pyramid.html

5. Martin Fowler. TestCoverage. 2012. https://martinfowler.com/bliki/TestCoverage.html

6. Spadini, Davide and Aniche, Maurício and Bacchelli, Alberto. PyDriller: Python Framework for Mining Software Repositories. https://pydriller.readthedocs.io/

7. Martin Fowler. Technical Debt. https://martinfowler.com/bliki/TechnicalDebt.html

8. Jean-Louis Letouzey. The SQALE Method for Evaluating Technical Debt. In 3rd International Workshop on Managing Technical Debt. Zurich, Switzerland. 2012.

9. KISS principle. Wikipedia https://thevaluable.dev/kiss-principle-explained/

10. Cognitive Complexity and its effect on the code. StackOverflow https://stackoverflow.com/questions/46673399/cognitive-complexity-and-its-effect-on-the-code

11. Autoboxing and Unboxing. Oracle Docs https://docs.oracle.com/javase/tutorial/java/data/autoboxing.html

12. Do not shadow or obscure identifiers in subscopes. https://wiki.sei.cmu.edu/confluence/display/java/DCL51-J.+Do+not+shadow+or+obscure+identifiers+in+subscopes