Two weeks ago, we took a stab at exploring and identifying the architectural elements and principles that underlie Next.js, a modern web framework for building React applications. Together, these elements and principles realize the fundamental concepts and properties that shape Next.js within its environment. If you haven’t done so yet, make sure to also read our first post on the vision of Next.js, in which we go into more depth on the foundations of its architecture.

However, software architecture is relevant not only during a system’s construction and establishment, but throughout its entire lifetime. Software systems are like living organisms: alive and constantly evolving as their environmental factors change[1]. And, like living organisms, as they attempt to adapt to new environments, they inevitably acquire alterations to their architecture. In biological systems these alterations happen at random, and only the organisms that adapt properly survive. Fortunately, the scenario is more optimistic for software systems, since we are (mostly) in control of the changes we make to them. Yet we must be careful not to accumulate technical debt, which arises when we cut corners during development. While technical debt is not inherently bad, too much of it leads to decay of the system, threatening its architectural integrity and hindering future changes.

Thus, our analysis of Next.js’ architecture is not complete without a discussion of the processes that safeguard the project’s quality and architectural integrity, such that its maintainers can anticipate future changes. This aspect is especially important for a project like Next.js because it is a framework for building (web) applications. After all, how can we expect developers to uphold the quality of their Next.js applications if the maintainers of Next.js fail to do so themselves?

Hence, today we will put Next.js’ codebase under the magnifying glass and assess whether its maintainers have properly upheld the quality standards and architectural integrity that the project strives for. We will do this in four parts:

- The Strategy: We identify and analyse the processes and methods put in place by the maintainers of Next.js to safeguard the quality and architectural integrity of the project.

- The Evidence: We assess the effectiveness of this strategy by evaluating Next.js’ codebase from various angles, such as code complexity, hotspots, and test adequacy.

- The Prospects: We attempt to understand how future changes (on the roadmap) could affect architectural components of the project and discuss how we can anticipate these changes.

- The Verdict: We formulate our overall judgment of the quality of the project and conclude our series of essays.

The Strategy

To safeguard quality and architectural integrity, software projects often rely on some form of software quality process, in which they establish what constitutes quality in their context and how the maintainers intend to reach their quality objectives. Some projects (such as Material-UI[2]) do this by explicitly defining in the contributing guide what they expect from contributions, such as Git conventions, documentation, code formatting, or tests. Many projects, however, do not, and instead rely on an implicit definition of the software quality process that the maintainers agree on.

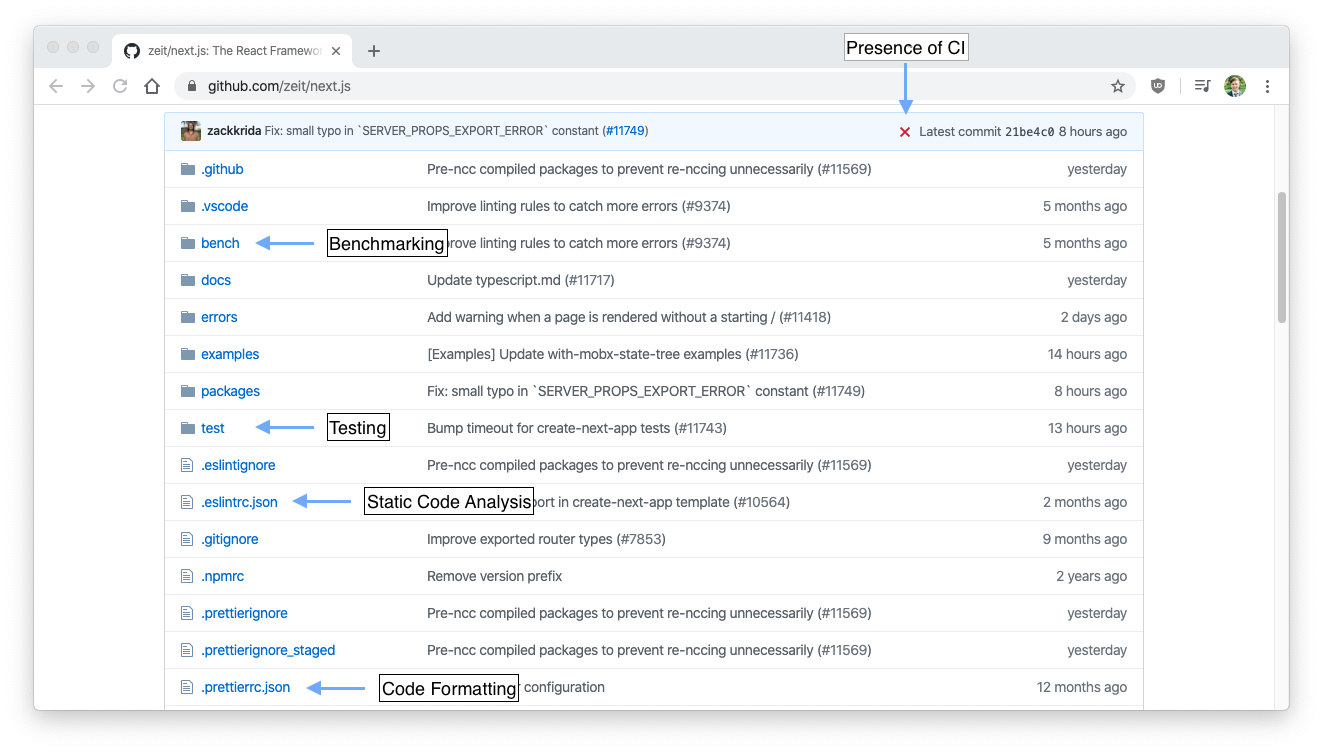

This holds for Next.js as well. While Next.js does have a contributing guide, it unfortunately goes into little detail about what the maintainers expect from (external) contributions in terms of quality. Public discussion about quality and architectural choices in the repository is rather limited: only a few issues[3][4][5] and pull requests[3] offer some words on them, and we expect that most of these discussions are held internally[6]. This does not mean that Next.js lacks a software quality process. Even a brief scan of the GitHub repository (see figure) reveals several hints that Next.js does in fact have some sort of quality process in place.

Figure: Next.js repository hinting the existence of a software quality process

At a high level, Next.js works with so-called canary releases (in addition to stable releases). Canary releases always contain the latest changes to the project and give users the opportunity to experiment with new (and experimental) functionality, while still aiming to be backwards compatible and as stable as possible[7]. Consequently, canary releases allow the maintainers to test and gather feedback on changes before these are introduced in the next stable release.
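To make the relation between canary and stable releases concrete, the sketch below parses version strings and orders them by Semantic Versioning precedence, under which a pre-release such as a canary sorts before the stable release it leads up to. The `X.Y.Z-canary.N` format and the helper names are assumptions for illustration, not code from the Next.js repository.

```typescript
// Minimal sketch, assuming canary builds are tagged "X.Y.Z-canary.N".
interface ParsedVersion {
  major: number;
  minor: number;
  patch: number;
  canary: number | null; // null for a stable release
}

function parseVersion(version: string): ParsedVersion {
  const match = /^(\d+)\.(\d+)\.(\d+)(?:-canary\.(\d+))?$/.exec(version);
  if (!match) throw new Error(`Unrecognized version: ${version}`);
  return {
    major: Number(match[1]),
    minor: Number(match[2]),
    patch: Number(match[3]),
    canary: match[4] === undefined ? null : Number(match[4]),
  };
}

function isCanary(version: string): boolean {
  return parseVersion(version).canary !== null;
}

// Negative if a < b, positive if a > b, 0 if equal. As in SemVer, a
// pre-release (canary) precedes the stable release with the same X.Y.Z,
// and canary numbers are compared numerically (canary.2 < canary.10).
function compareVersions(a: string, b: string): number {
  const x = parseVersion(a);
  const y = parseVersion(b);
  if (x.major !== y.major) return x.major - y.major;
  if (x.minor !== y.minor) return x.minor - y.minor;
  if (x.patch !== y.patch) return x.patch - y.patch;
  if (x.canary === y.canary) return 0;
  if (x.canary === null) return 1; // stable sorts after its canaries
  if (y.canary === null) return -1;
  return x.canary - y.canary;
}

const releases = ["9.3.1", "9.3.1-canary.10", "9.3.0", "9.3.1-canary.2"];
console.log(releases.sort(compareVersions));
// order: 9.3.0, 9.3.1-canary.2, 9.3.1-canary.10, 9.3.1
```

Users opt into this channel through the `canary` dist-tag on npm (`npm install next@canary`), while the default tag tracks stable releases.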

In between these canary releases, Next.js has several processes in place to safeguard the quality of the project. Here, we distinguish between processes that need to be performed manually by the maintainers or external developers, and processes that are performed automatically with each commit, pull request or release.

Manual Processes

Development of Next.js is organized using a GitHub workflow[8], where contributors propose changes to the repository by means of a pull request on top of the canary branch. Before the changes may be merged into the repository, a maintainer must first approve them. This mostly holds for the maintainers’ own pull requests as well, although it does not seem to be a strict requirement[9].

Whether the maintainers do a full code review before approval is not clear. There are some instances where a maintainer requests changes to a pull request[10], but these pull requests represent less than 5% of the total[11].

Moreover, the contributing guide of Next.js does not outline any testing requirements for contributions. While Next.js does have an extensive test suite, it is mostly developed by the maintainers themselves, with only a few cases of external contributors adding tests[12]. This can be explained by the fact that external contributors mostly contribute to the Next.js examples, which do not require tests. That said, the maintainers certainly appreciate test cases in pull requests[13].

Automated Processes

In addition to the manual work, Next.js runs several automated processes to safeguard the quality of the project. GitHub Actions and Azure Pipelines are used to automatically manage and run the following processes for each pull request:

- An extensive test harness with over 150 in-browser end-to-end test suites.

- Static code analysis using ESLint to detect common mistakes and code smells.

- A bundle size check[14], to determine the impact of the contribution on the size of the build artifacts.

- Automatic deployment to the appropriate distribution channels in case of a release.
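The idea behind a bundle size check can be sketched as a comparison of artifact sizes between the base branch and the pull request. The sketch below is hypothetical: the input format (a map from file name to size in bytes), the function names, and the growth threshold are assumptions, not the check Next.js actually runs.

```typescript
// Hypothetical sketch of a bundle size check: compare each build artifact
// on the base branch with the same artifact on the pull request.
interface SizeDiff {
  file: string;
  base: number; // bytes on the base branch (0 if the file is new)
  head: number; // bytes on the pull request (0 if the file was removed)
  delta: number;
  percent: number; // growth relative to base; Infinity for new files
}

function diffBundleSizes(
  base: Record<string, number>,
  head: Record<string, number>,
): SizeDiff[] {
  // Union of file names present in either build.
  const files = Object.keys({ ...base, ...head });
  const diffs: SizeDiff[] = files.map((file) => {
    const b = file in base ? base[file] : 0;
    const h = file in head ? head[file] : 0;
    const delta = h - b;
    const percent = b === 0 ? (h === 0 ? 0 : Infinity) : (delta / b) * 100;
    return { file, base: b, head: h, delta, percent };
  });
  // Largest absolute change first, so reviewers see the biggest impact.
  return diffs.sort((x, y) => Math.abs(y.delta) - Math.abs(x.delta));
}

// Artifacts that grew by more than the allowed percentage.
function regressions(diffs: SizeDiff[], maxGrowthPercent: number): SizeDiff[] {
  return diffs.filter((d) => d.percent > maxGrowthPercent);
}
```

Reporting the diff rather than failing outright leaves the final judgment to the reviewer, which fits a process where a maintainer approves every pull request anyway.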

In addition to running these processes in CI/CD environments, Next.js already runs static code analysis and formats the code according to its coding style using Prettier before a developer can commit a change.
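A pre-commit step like this typically starts from the list of staged files (for example, the output of `git diff --cached --name-only`) and decides which tool handles which file. The sketch below is a hypothetical illustration of that selection step only; the extension lists and the function names are assumptions, and the actual ESLint and Prettier invocations are left out.

```typescript
// Hypothetical sketch: decide which staged files to lint and to format.
const LINTABLE = [".js", ".jsx", ".ts", ".tsx"]; // assumed ESLint targets
const FORMATTABLE = LINTABLE.concat([".json", ".md", ".css"]); // assumed Prettier targets

function hasExtension(file: string, extensions: string[]): boolean {
  return extensions.some((ext) => file.slice(-ext.length) === ext);
}

// `stagedOutput` is one path per line, as printed by git.
function planPreCommit(stagedOutput: string): { lint: string[]; format: string[] } {
  const files = stagedOutput
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
  return {
    lint: files.filter((f) => hasExtension(f, LINTABLE)),
    format: files.filter((f) => hasExtension(f, FORMATTABLE)),
  };
}
```

Restricting the hook to staged files keeps it fast enough to run on every commit, leaving the full analysis to CI.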

The Evidence

A strategy is not very useful if it is not reflected in the actual product. So, let’s now dive into the codebase of Next.js and evaluate the effectiveness of the maintainers’ strategy. For this, we will attempt to quantify several desirable characteristics associated with software quality in the Next.js codebase.

A starting point could be to measure the number of tests in the codebase. Next.js has an extensive test harness consisting of various types of tests, the majority of which are end-to-end tests, with over 150 such test suites. More important, however, is how well the tests cover the codebase. While Next.js did at one point track test coverage, the maintainers removed it because the reported coverage was wrong due to internal files being bundled. Moreover, they argue that since the tests are mostly end-to-end and their confidence in the test harness is very high, test coverage is nice to have rather than extremely useful for the project[15].
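One way bundled files can distort coverage is by counting code the tests were never meant to exercise. The minimal sketch below, assuming per-file line counts from some coverage tool, computes overall line coverage while excluding bundled paths; the `FileCoverage` shape and the `compiled/` prefix are hypothetical stand-ins, not Next.js’ actual layout or tooling.

```typescript
// Minimal sketch: line coverage that ignores bundled files.
interface FileCoverage {
  path: string;
  coveredLines: number;
  totalLines: number;
}

function lineCoverage(files: FileCoverage[], excludePrefixes: string[]): number {
  let covered = 0;
  let total = 0;
  for (const f of files) {
    // Skip any file under an excluded prefix (e.g. bundled dependencies).
    const excluded = excludePrefixes.some((p) => f.path.indexOf(p) === 0);
    if (excluded) continue;
    covered += f.coveredLines;
    total += f.totalLines;
  }
  return total === 0 ? 0 : covered / total;
}
```

With 100 of 200 source lines covered and an 800-line bundled file left uncovered, excluding the bundle reports 50% coverage, whereas including it reports only 10%.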

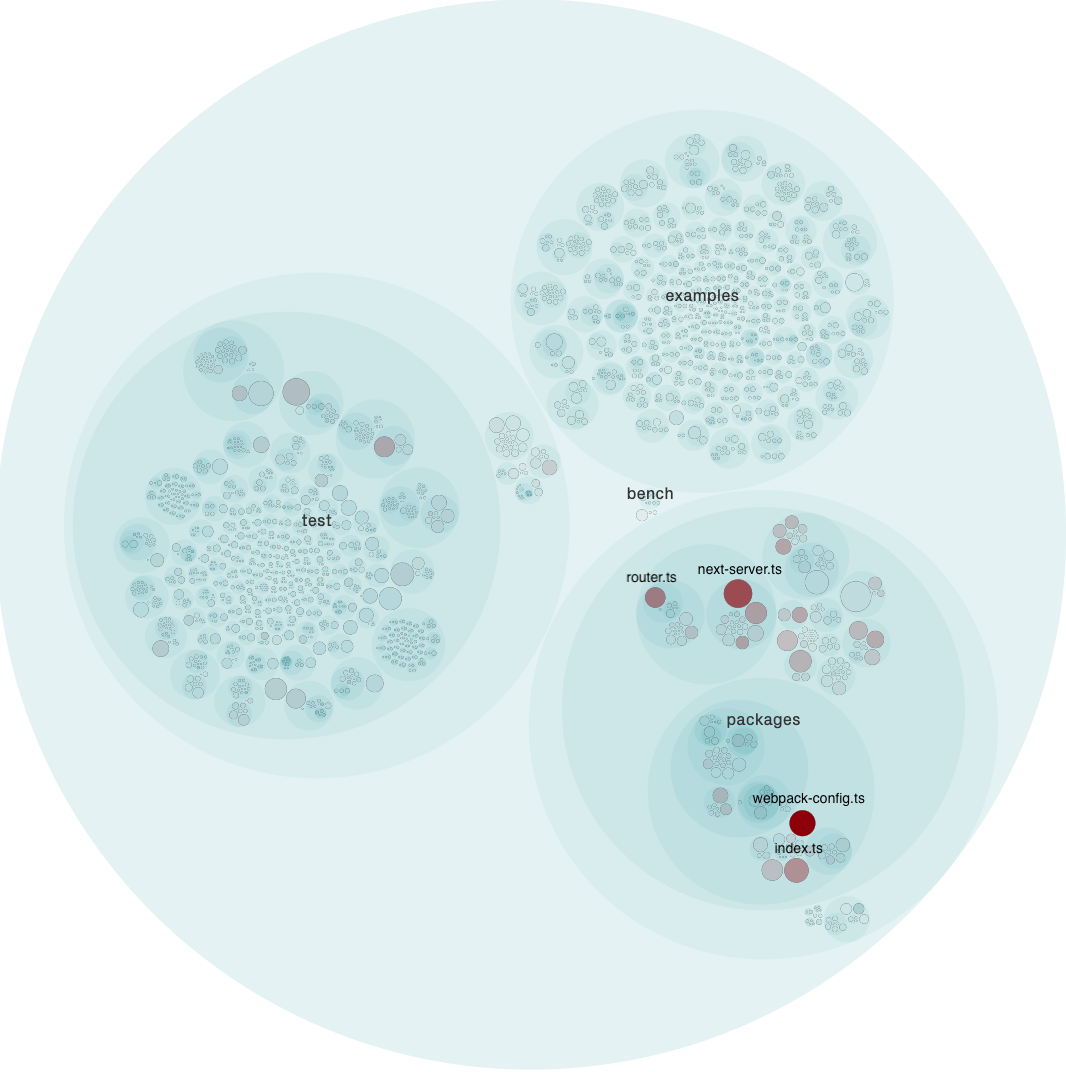

Figure: Hotspots in the codebase identified by CodeScene

We could also use static code analysis tools to identify bugs or code smells. Before we do that, however, let’s first focus on the parts of the code that require the most attention: the parts with the most development activity, since such code is usually complicated and susceptible to bugs. As seen in the figure above, for Next.js most development happens in next-server.ts, which contains the main server logic, and in webpack-config.ts, which builds the configuration for the webpack module bundler.
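A simplified version of this hotspot idea ranks files by how often they change, weighted by their size. The sketch below counts revisions from the output of `git log --format= --name-only`, which lists the changed paths of every commit, and uses lines of code as a rough complexity proxy. That proxy and the scoring formula are assumptions; CodeScene’s own model is more elaborate.

```typescript
// Simplified hotspot ranking: change frequency x size (LOC as a proxy).
interface Hotspot {
  file: string;
  revisions: number;
  loc: number;
  score: number;
}

// Count how many commits touched each path (blank lines separate commits).
function countRevisions(gitLogOutput: string): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const raw of gitLogOutput.split("\n")) {
    const file = raw.trim();
    if (file === "") continue;
    counts[file] = (counts[file] || 0) + 1;
  }
  return counts;
}

function rankHotspots(
  revisions: Record<string, number>,
  loc: Record<string, number>,
  top: number,
): Hotspot[] {
  return Object.keys(revisions)
    .filter((file) => file in loc) // ignore files that no longer exist
    .map((file) => ({
      file,
      revisions: revisions[file],
      loc: loc[file],
      score: revisions[file] * loc[file],
    }))
    .sort((a, b) => b.score - a.score)
    .slice(0, top);
}
```

Multiplying the two factors means a large file that rarely changes, or a small file that changes constantly, ranks below a large file that changes often, which is the kind of file (such as next-server.ts) a review effort should target first.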

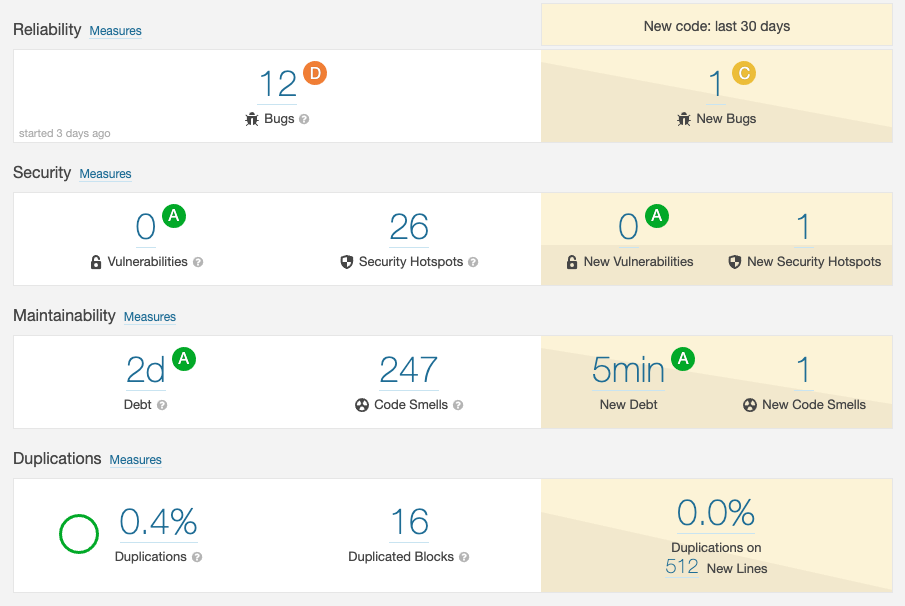

Figure: Technical Debt measured by SonarQube

Turning to static code analysis tools, SonarQube in this case, we find that the Next.js codebase is in good condition: as depicted in the figure above, it scores an A on all quality characteristics except reliability, which scores a D because a critical bug was detected. Similarly, an analysis by SIG rates the maintainability of Next.js at 3.9 out of 5 stars, with the lowest scores mainly on unit size and unit complexity.
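The letter grades follow fixed rules, which explains why a single critical bug drags reliability down to a D while the other characteristics stay at A. The sketch below encodes the default rating grids as SonarQube documents them: reliability is set by the most severe bug, and maintainability by the technical debt ratio (remediation effort divided by development effort, assuming the default cost of 30 minutes per line of code). The function names are our own, and a SonarQube instance may be configured with different thresholds.

```typescript
type Severity = "BLOCKER" | "CRITICAL" | "MAJOR" | "MINOR";
type Rating = "A" | "B" | "C" | "D" | "E";

// Reliability: the single worst bug determines the grade.
function reliabilityRating(bugs: Severity[]): Rating {
  if (bugs.indexOf("BLOCKER") >= 0) return "E";
  if (bugs.indexOf("CRITICAL") >= 0) return "D";
  if (bugs.indexOf("MAJOR") >= 0) return "C";
  if (bugs.indexOf("MINOR") >= 0) return "B";
  return "A";
}

// Maintainability: technical debt ratio against the default grid
// (A <= 5%, B <= 10%, C <= 20%, D <= 50%, E above that).
function maintainabilityRating(remediationMinutes: number, linesOfCode: number): Rating {
  const ratio = remediationMinutes / (linesOfCode * 30); // 30 min per line assumed
  if (ratio <= 0.05) return "A";
  if (ratio <= 0.1) return "B";
  if (ratio <= 0.2) return "C";
  if (ratio <= 0.5) return "D";
  return "E";
}
```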

While these initial results look promising, the analysis still reports bugs, security hotspots, and code smells. Upon closer inspection, however, we find that some of these issues are not applicable to Next.js or are false positives. For example, the security hotspots are caused by the use of regular expressions, which may pose a security risk (such as catastrophic backtracking) when applied to untrusted input; this is, however, not the case for Next.js. Nonetheless, the analysis still contains some valid issues which should be addressed by the maintainers:

The Prospects

Quality control is not only about safeguarding what we already have, but also about anticipating what is to come. As we said before, software is alive and constantly evolving, so the decisions we make today must take the changes of tomorrow into account. In our first post on the vision of Next.js, we already wrote about its private roadmap and the high-level functionality we expected it to contain:

- Improved Static Site Generation (SSG)[18]

- Support for React Concurrent mode and streaming rendering[19]

- Support for partial hydration[20]

At the time of writing, Next.js has actually released version 9.3, featuring next-generation Static Site Generation support[21]. While fully backwards compatible, this feature required significant additions to the Next.js API surface and changes to Next.js’ internals. From the initial commit[22], we see that the changes are mostly concentrated in the build module (for building Next.js applications) and the server module, with only a small change needed in the client module.
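Much of that new API surface consists of two page-level data-fetching functions introduced in 9.3, `getStaticPaths` and `getStaticProps`, which Next.js calls at build time. The sketch below shows their rough shape for a hypothetical `pages/posts/[slug]` page. The `POSTS` array is a hypothetical data source, the simplified interfaces stand in for the `GetStaticPaths` and `GetStaticProps` types that `next` exports, and the React page component is omitted so the file runs without Next.js installed.

```typescript
// Simplified stand-ins for the types exported by `next`.
interface StaticPathsResult {
  paths: { params: { slug: string } }[];
  fallback: boolean;
}
interface StaticPropsContext {
  params: { slug: string };
}
interface Post {
  slug: string;
  title: string;
}

// Hypothetical data source; a real app might read Markdown files or a CMS.
const POSTS: Post[] = [
  { slug: "hello-world", title: "Hello, world" },
  { slug: "nextjs-9-3", title: "Next.js 9.3" },
];

// Build time: list the dynamic routes to pre-render.
export async function getStaticPaths(): Promise<StaticPathsResult> {
  return {
    paths: POSTS.map((post) => ({ params: { slug: post.slug } })),
    fallback: false, // paths not listed here result in a 404
  };
}

// Build time: load the data for one path; the returned props are passed
// to the page component, which is then rendered to static HTML.
export async function getStaticProps(
  context: StaticPropsContext,
): Promise<{ props: { post: Post } }> {
  const post = POSTS.filter((p) => p.slug === context.params.slug)[0];
  if (!post) throw new Error(`No post for slug ${context.params.slug}`);
  return { props: { post } };
}
```

Because both functions run during the build, supporting them touches the build module (to call them and emit HTML per path) and the server module (to serve the results), which matches where the initial commit concentrates its changes.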

A bigger challenge is adding support for React Concurrent mode and streaming rendering to Next.js. Traditionally, rendering a page on the server required a blocking call to React (in a single-threaded Node.js environment), and consequently many developers kept using global state in their components. With React Concurrent mode, however, React can interleave the rendering of multiple pages in a single thread. While this promises to improve overall throughput, it presents a challenge for anyone using global state in their components, since interleaved renders may read and overwrite each other’s state. Next.js also suffers from this problem, most notably in the next/head module, for which solutions are now being discussed[23]. We expect mostly the server and client modules to be affected by this feature.
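The problem with global state can be reproduced without React at all. The hypothetical sketch below (not the actual next/head implementation) lets two "renders" register a page title in a module-level array, yield as an interruptible renderer might, and then read the array back. The function names are our own; the per-request variant stands in for passing a collector down through something like React context.

```typescript
// Hypothetical illustration: module-level state shared by every request.
const globalHead: string[] = [];

async function renderWithGlobalState(title: string): Promise<string[]> {
  globalHead.length = 0; // "reset" for this request
  globalHead.push(title);
  await Promise.resolve(); // yield, letting another render run in between
  return globalHead.slice();
}

// Per-request alternative: each render owns its own collector.
async function renderWithRequestState(title: string): Promise<string[]> {
  const head: string[] = [];
  head.push(title);
  await Promise.resolve();
  return head;
}

Promise.all([renderWithGlobalState("A"), renderWithGlobalState("B")]).then(([a, b]) => {
  console.log(a, b); // both renders see ["B"]: page A leaked page B's title
});
```

With a blocking renderer, each render runs to completion before the next starts, so the global version happens to work; as soon as renders interleave, the second request resets and overwrites the first one’s state.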

Adding support for partial hydration would be a great addition to Next.js, as the maintainers also acknowledge[20]. However, it is difficult to estimate the impact of this functionality on the codebase, as the maintainers have yet to decide how they wish to shape it. Nonetheless, we expect this feature to require a new API for the user to specify which parts of a page should be rendered on the server and which on the client, in addition to some significant changes in the server to be able to recognize this and partially defer rendering to the client.

The Verdict

In this post, we have seen the various processes Next.js uses to maintain the quality of the project and support its architectural integrity. We have assessed the effectiveness of Next.js’ strategy and analyzed where the maintainers’ approach seems to be lacking and where it functions well. Finally, we have tried to anticipate future changes and how these may affect the architectural components of Next.js. Altogether, these provide valuable insights into the architecture of Next.js.

Overall, we find that the Next.js project is well-maintained and of good quality. Nonetheless, there are still some areas where Next.js could improve. For one, we would like the maintainers to be more open about their code quality standards towards external contributors, for example by providing a more extensive contributing guide. Moreover, while we understand the technical difficulties, we would like to see test coverage tracked again, to prevent new changes from unintentionally being left untested.

- N. Sussman, A software system is a living organism. Blog, 2015.
- https://github.com/mui-org/material-ui/blob/master/CONTRIBUTING.md
- Tim Neutkens and Arunoda Susiripala, Towards Next.js 5: Introducing Canary Updates. Blog, 2017.
- GitHub, Understanding the GitHub flow. GitHub Guides, 2017.
- https://github.com/zeit/next.js/pulls?page=2&q=is%3Apr+review%3Achanges-requested+is%3Aclosed
- https://github.com/zeit/next.js/pull/10592#pullrequestreview-388408075
- https://github.com/zeit/next.js/pull/10592#issuecomment-588221211
- https://sonarcloud.io/project/issues?id=fabianishere_next.js&issues=AXFQ1QI1kkKt-l-TInlI&open=AXFQ1QI1kkKt-l-TInlI
- https://sonarcloud.io/project/issues?id=fabianishere_next.js&issues=AXFQ1QGUkkKt-l-TInj-&open=AXFQ1QGUkkKt-l-TInj-
- https://github.com/zeit/next.js/discussions/10741#discussioncomment-745
- Tim Neutkens et al., Next.js 9.3. Blog, 2020.
- https://github.com/zeit/next.js/commit/c24daa21722fadfce2d1d561ce65ecc0efa6a7ea