Software quality plays an important role in an open-source library that is being used in software projects worldwide. It helps in keeping the code maintainable and the releases stable. In this third part of our essay series about the NumPy project we will have a look at the software quality of the NumPy source code and how this quality is assured by the developers of the project.

We start out by looking at the software quality assurance process in general and the steps that are taken in this process. Then, we have a look at the testing process that is implemented in NumPy. After that, we compare the coding activity and roadmap to the actual architectural components. Finally, we have a look at the improvements which are proposed by the Software Improvement Group (SIG) and whether there is any technical debt.

NOTE: The formatting in this essay has been optimised for the online version.

- Software Quality Assurance

- Continuous Integration

- Testing

- Coding Activity in Architectural Components

- Evaluation of Code Quality

- Technical Debt

Software Quality Assurance

As described in our first essay, the NumPy project relies on a large group of developers from around the world for its development. When working with almost 900 contributors1 from around the world on a project, it is important to have some sort of software quality assurance process in place. The development process of contributing to NumPy is described as follows on their website2:

- Select an issue you want to fix and fork the main repository

- Develop the contribution locally

- Push the changes and create a pull request to the main repository

- Your pull request will now be reviewed by one of the core developers

- If any changes are requested, these should be applied before the pull request is approved

- In addition, several continuous integration (CI) checks are performed, which are described further below

- If all changes are approved and the CI passes, the pull request will be merged to the NumPy master branch

All these steps together ensure that new contributions are checked and reviewed by the core developers before they are merged to the master. In addition, the CI helps with checking the code. By performing all these checks before merging to master, the quality of the source code of NumPy is maintained.
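The merge condition in the last step can be written down as a small predicate. This is only an illustrative sketch: the `PullRequest` class, its fields and the `can_merge` function are hypothetical names chosen for this example and do not correspond to any NumPy or GitHub API.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PullRequest:
    """Hypothetical model of a pull request, for illustration only."""
    approved_by_core_dev: bool = False
    changes_requested: bool = False
    # Maps a CI check name to whether that check passed.
    ci_checks: Dict[str, bool] = field(default_factory=dict)


def can_merge(pr: PullRequest) -> bool:
    """Return True only if the review and all CI checks are satisfied."""
    if pr.changes_requested or not pr.approved_by_core_dev:
        return False
    # An empty set of checks is treated as "CI has not run yet".
    return bool(pr.ci_checks) and all(pr.ci_checks.values())


pr = PullRequest(approved_by_core_dev=True,
                 ci_checks={"linux": True, "windows": False})
print(can_merge(pr))  # False: one CI check failed
pr.ci_checks["windows"] = True
print(can_merge(pr))  # True: approved and all checks pass
```

Treating a pull request without any CI results as not mergeable is a design choice of this sketch, made so that a missing CI run cannot be mistaken for a passing one.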

Continuous Integration

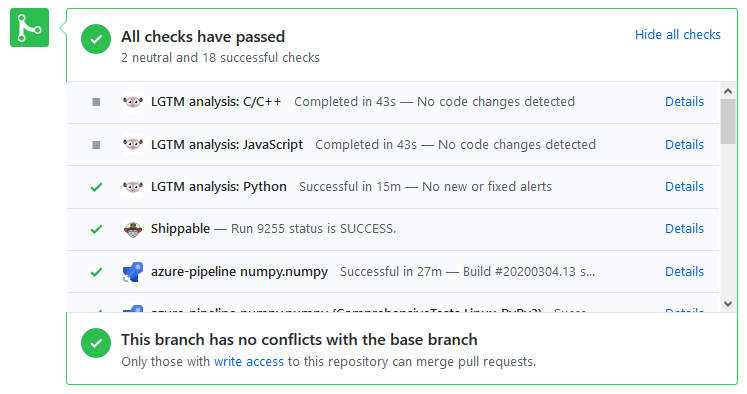

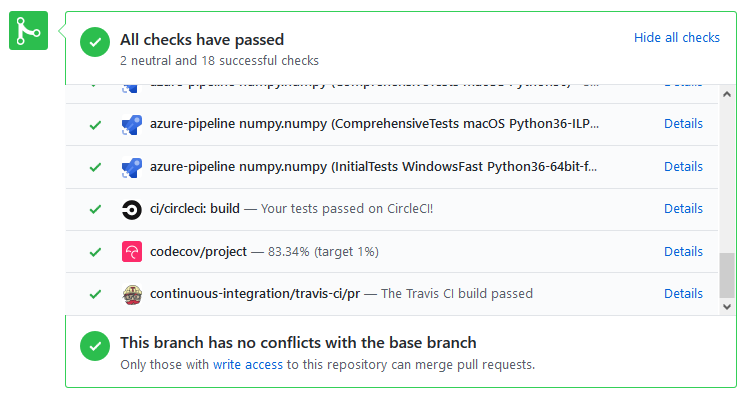

To test the source code, multiple CI services are used, such as Travis CI, Azure Pipelines, CircleCI, LGTM and Codecov. These are configured to run all tests on different platforms: Windows, Linux and macOS are used for CI3. Different versions of Python, ranging from Python 3.5 to 3.8, are used for running the entire test suite4. NumPy also uses Codecov as a service to keep track of test coverage and changes. LGTM is used as a code analysis tool that runs security analyses5. This is very useful for NumPy as there is a lot of C code (just over 50% of the entire codebase). Because C code is relatively prone to security vulnerabilities, LGTM is a very valuable tool.
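To give a feeling for how quickly the number of CI jobs grows, the platforms and Python versions can be combined into a job matrix. The sketch below assumes a full cross product of platforms and versions, which is a simplification: the real configuration files pick specific combinations, and `build_matrix` is a hypothetical helper written for this essay.

```python
from itertools import product

# Platforms and Python versions named in the text above.
PLATFORMS = ["Windows", "Linux", "macOS"]
PYTHON_VERSIONS = ["3.5", "3.6", "3.7", "3.8"]


def build_matrix(platforms, versions):
    """Return every (platform, version) pair as a list of job dicts."""
    return [{"os": os_name, "python": version}
            for os_name, version in product(platforms, versions)]


jobs = build_matrix(PLATFORMS, PYTHON_VERSIONS)
print(len(jobs))  # 12 combinations: 3 platforms x 4 versions
print(jobs[0])    # {'os': 'Windows', 'python': '3.5'}
```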

The CI is run for every PR. On the PR page there is a clear overview of the results of these CI pipelines including checks:

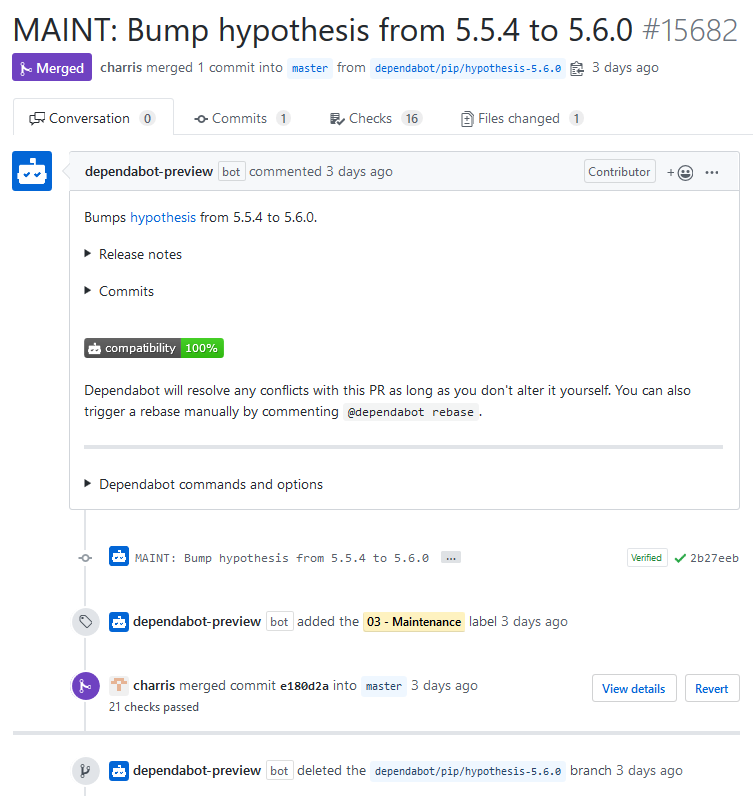

There are also other tools used which are not directly visible in the PR checks. One of these tools is Dependabot6. This bot is configured to check the dependencies used by NumPy and bumps the versions if there is a new one available. This is important for security updates that otherwise could be missed by the maintainers. By using this automated system the maintainers can focus on the product itself without having to worry about checking the security vulnerabilities of all their dependencies every day. Dependabot can be seen in action below; the whole PR can be found here.

The CI is very extensive, as there are many jobs that run for just one PR. However, this does not come without its cons. The largest disadvantage is that the CI is flaky. Our experience in submitting PRs to the NumPy repository was not ideal: jobs would fail, and users cannot retrigger specific jobs, probably because this would overload the CI pipelines. The second disadvantage is the long time it takes to run the CI. It takes around 10-15 minutes for the entire CI pipeline to complete, which discourages making small commits.

Testing

NumPy makes use of an extensive test suite that, according to its own website, aims to unit test every module and package thoroughly7. This means that every NumPy module has its own suite of unit tests. The goal of these unit tests is specified as follows:

“These tests should exercise the full functionality of a given routine as well as its robustness to erroneous or unexpected input arguments.”
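A test in the spirit of this guideline covers both normal use and erroneous input. The example below was written for this essay and is not copied from NumPy’s own test suite. It uses `np.concatenate` as the routine under test, and the test function names are our own.

```python
import numpy as np
from numpy.testing import assert_array_equal, assert_raises


def test_concatenate_joins_arrays():
    # Normal use: two 1-D arrays are joined end to end.
    result = np.concatenate([np.array([1, 2]), np.array([3])])
    assert_array_equal(result, np.array([1, 2, 3]))


def test_concatenate_rejects_empty_sequence():
    # Erroneous input: there is nothing to concatenate.
    with assert_raises(ValueError):
        np.concatenate([])


def test_concatenate_rejects_mismatched_dimensions():
    # Unexpected input: a 2-D array cannot be joined with a 1-D array.
    with assert_raises(ValueError):
        np.concatenate([np.zeros((2, 2)), np.zeros(3)])
```

Because the functions follow the `test_` naming convention, a test runner such as pytest would collect them automatically.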

To accomplish this level of code testing, NumPy asks its contributors to write code in a test-driven-development fashion. To run the tests, the NumPy source code should be pulled and the necessary dependencies should be installed. Then, the command python3 -v -t runtests.py --coverage should be run. We ran the test suite on the most recent version of NumPy, which includes our contribution, and got the following output:

The output results are listed in a generated HTML file containing coverage information of all different modules. The coverage results of NumPy as a whole are displayed below.

NumPy does not perform any tests other than unit tests, which is understandable because NumPy is merely a library containing tools for computations on arrays and not a system with components that interact intensively.

Coding Activity in Architectural Components

Hotspots

Hotspots are a good indicator of where the main activity in a project takes place. According to Tornhill, in his book Your Code as a Crime Scene, there is a strong correlation between hotspots, maintenance costs, and software defects8. Therefore, it is a good idea to focus on hotspots to discover potential problems in the code or even the architecture itself.

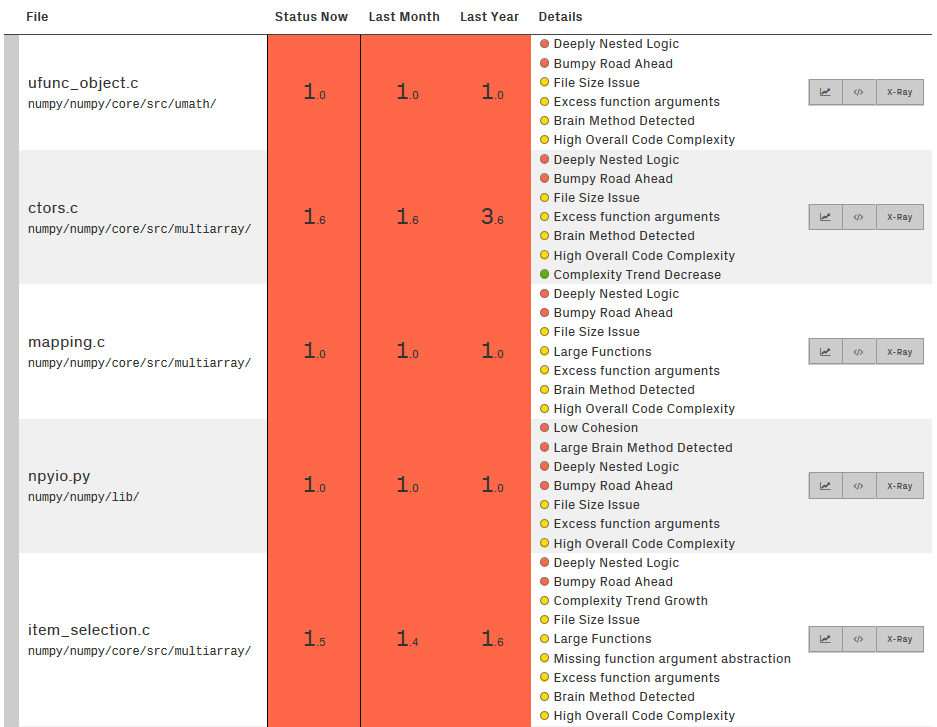

In order to detect development hotspots in the repository we used CodeScene. CodeScene is a company that analyses source code from git repositories to compute a wide range of metrics from several categories, for example technical debt, hotspots, code health, coupling and complexity. Hotspots, which is what we are interested in specifically, are determined using the frequency of code changes in a file over a period of time9. The results of the analysis can be seen below. The figure lists the source files, starting with the file that is changed most frequently. The coloured status columns in the table denote the Code Health metric, which ranges from 1 (code with severe quality issues) to 10 (healthy code that is relatively easy to understand and evolve)10. We will come back to this further on.

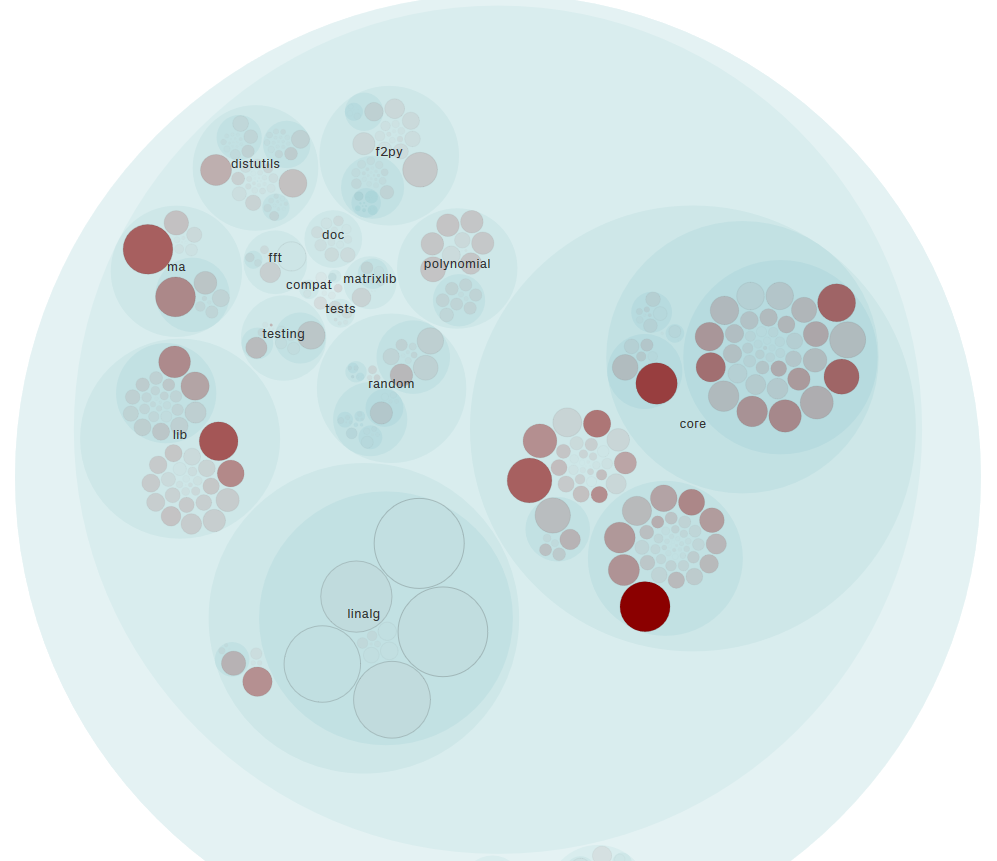

To get a better view of where these files reside in the project there is another figure below which shows the files that are changed most frequently inside their folder structure. This shows that the files that are changed the most are in the core, lib, and ma modules. The core module is evidently the most active module, as 4 of the top 5 files are in the core module.
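The idea of ranking files by change frequency can be sketched in a few lines. This is a simplified illustration and not CodeScene’s actual algorithm: `rank_hotspots` is a hypothetical function, and the commit history and file paths are made up for the example.

```python
from collections import Counter


def rank_hotspots(commits, top_n=3):
    """Rank files by how many commits touched them.

    `commits` is a list of commits, each given as a list of file paths.
    """
    change_counts = Counter()
    for changed_files in commits:
        # Count each file once per commit, even if it is listed twice.
        change_counts.update(set(changed_files))
    return change_counts.most_common(top_n)


# Made-up commit history; the paths are illustrative only.
history = [
    ["core/a.c", "lib/b.py"],
    ["core/a.c"],
    ["core/a.c", "ma/c.py"],
    ["lib/b.py"],
]
print(rank_hotspots(history, top_n=2))
# [('core/a.c', 3), ('lib/b.py', 2)]
```

In practice the list of changed files per commit would come from the version control history, restricted to the period of interest.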

Roadmap

As has become clear from previous parts of this essay, NumPy’s hotspots are mostly placed in its core module. This is not surprising, because NumPy’s core module houses the implementation of its array object which is the building block for all of NumPy’s other functionality. Therefore, it is also not surprising that most of NumPy’s major roadmap items pertain to this core component. The following descriptions were copied from our previous essay and modified to indicate their respective components11:

- Interoperability

- The NumPy developers want to make it easier for other libraries to interoperate with NumPy. They want to provide better interoperability protocols and better array subclass handling, among other things.

- These features relate to how the array object can be subclassed and how libraries with their own adapted versions of NumPy can cooperate within the same source code. All roadmap items related to interoperability will therefore relate to the core component.

- Extensibility

- The NumPy developers want to simplify NumPy’s internal datatypes (dtypes) to make it easier to extend NumPy’s functionality. For instance, they want to simplify the creation of custom dtypes and want to add new ‘string’ dtypes for dealing with textual data.

- As with interoperability, these roadmap items deal with extending or modifying NumPy’s internal datatypes, which are housed in its core component.

- Performance

- The NumPy developers want to improve NumPy’s performance through, for instance, SIMD instructions and optimisations within functions.

- These performance features will be implemented using low-level code, and not Python, so they too will relate to the core component.

- Website and documentation

- The website needs to be rewritten completely and the documentation is of ‘varying quality’12.

- The website is not part of the NumPy library and does not belong to any component.

- Random number generation policy & rewrite

- At the time of writing, the developers are close to completing a new random number generation framework.

- Random number generation does have its own component, called ‘random’. This is where random number generation frameworks are added as well.

Evaluation of Code Quality

As mentioned before, we have used CodeScene to analyse the code of NumPy. In addition, we have access to the analysis that has been made by the SIG. We will now look at the outcomes of these analyses and possible areas for improvement.

CodeScene Analysis

As can be seen from the CodeScene analysis above, NumPy scores very poorly on CodeScene’s Code Health metric. CodeScene indicates that most files have deeply nested logic, large file and function sizes and high complexity, and that they contain brain methods, which are functions with too much important behaviour. All these flags that are raised by CodeScene can be attributed to the fact that NumPy simply provides a lot of different functions for working with arrays. The library contains files which consist of different functions that can be used to manipulate and work with arrays. Therefore, we do not consider the raised warnings to be problems in the source code.

Software Improvement Group Analysis

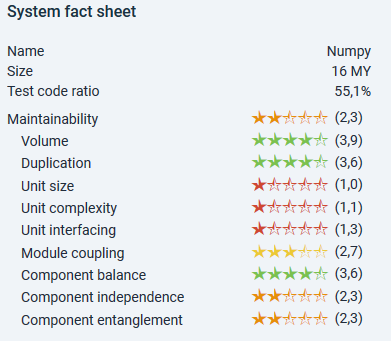

Through their portal Sigrid, the SIG has provided us with insightful metrics about the NumPy repository.

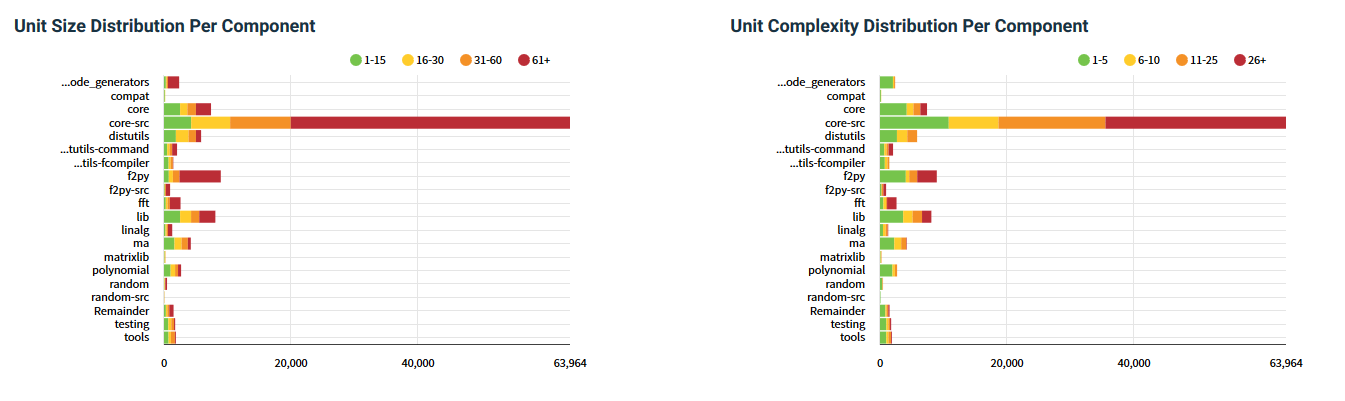

The system fact sheet provides a high-level overview of how the NumPy repository scores on different metrics defined by the SIG13. It can be seen that NumPy scores relatively well on Volume, Duplication and Component balance. The repository is thus not too large, avoids duplication and splits its code well into different components, in accordance with their architectural style which is component-based. You can read more about this in our previous essay.

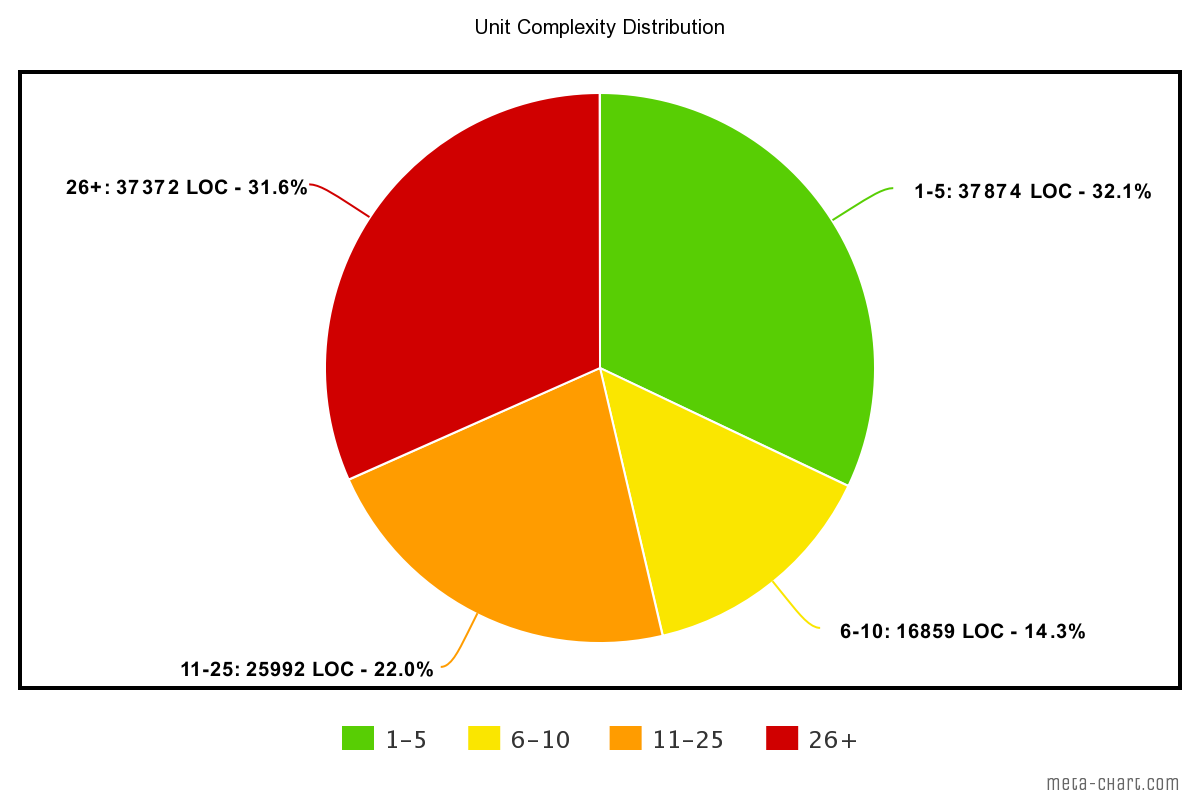

On the other hand, just like CodeScene, the SIG rates the Unit size, Unit complexity and Unit interfacing as quite poor. This can again be attributed to the fact that NumPy simply provides a whole lot of different functions, as mentioned before.

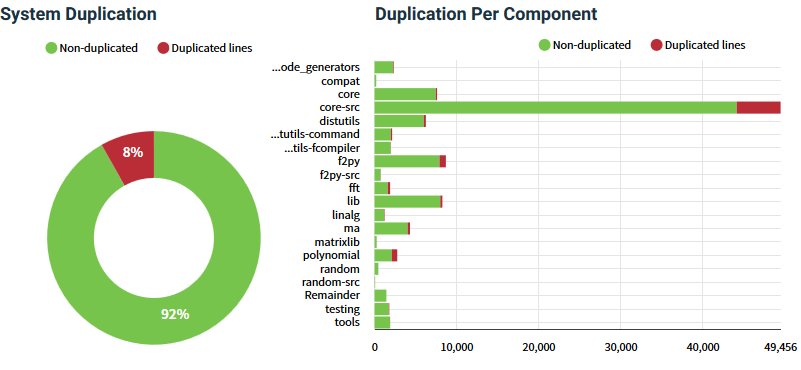

Below, some more statistics can be found which have been provided by the SIG. As can be seen, NumPy scores well on code duplication, with only 8% of duplicated code. Most of this resides in the core-src library and is probably boilerplate C code.

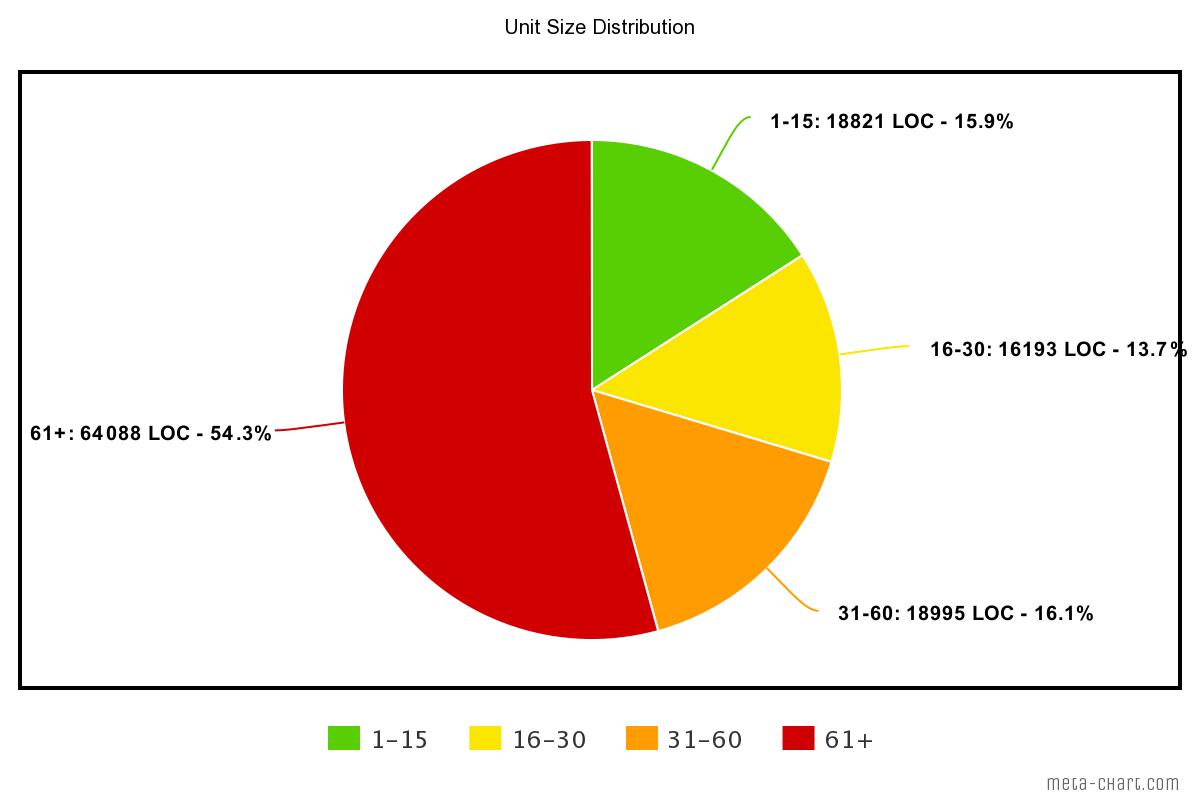

NumPy clearly scores poorly on unit size and complexity, with respectively 16% and 32% of the code falling within the acceptable ranges. Unit size and complexity score poorly across the whole system, as can be seen in the bar graphs.
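A percentage such as "share of code within the acceptable range" can be approximated for Python code with the standard `ast` module. The sketch below is our own simplification and not the SIG’s measurement: the threshold of 15 lines is a hypothetical value, `share_within_limit` is a made-up helper, and nested functions would be counted twice.

```python
import ast

MAX_UNIT_LINES = 15  # hypothetical threshold, not taken from the SIG model


def share_within_limit(source, max_lines=MAX_UNIT_LINES):
    """Return the fraction of function lines that sit in short functions."""
    total = 0
    within = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno is available from Python 3.8 onwards.
            length = node.end_lineno - node.lineno + 1
            total += length
            if length <= max_lines:
                within += length
    # Source without any functions is treated as fully within the limit.
    return within / total if total else 1.0


# One 2-line function and one 22-line function.
sample = (
    "def small():\n    return 1\n\n"
    "def large():\n" + "    x = 0\n" * 20 + "    return x\n"
)
print(round(share_within_limit(sample), 3))  # 0.083
```

Here only 2 of the 24 function lines belong to a unit below the threshold, which illustrates how a few very long functions can dominate such a score.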

Technical Debt

According to Martin Fowler, technical debt in a system is a deficiency in quality, or as he calls it, cruft, which makes it harder to change the system14. In this section we will discuss occurrences of technical debt in the NumPy project.

The SIG provides a large number of refactoring candidates through Sigrid. However, almost all of these are related to unit size or complexity, as NumPy scores poorly in these areas. If these refactorings were actually carried out, a lot of functions would be moved to separate files, making the repository a lot less structured and much less clear.

The other technical debt that we came across consisted of quite a few lines of legacy code. This is, however, inherent to a library that provides functionality for many different versions of Python. With the removal of support for Python 2.7, a lot of this legacy code has already been taken care of.

Conclusion

Being an open-source project, the NumPy project has multiple checks in place for assuring code quality. These involve manual checks by core developers but also a considerable number of CI checks for different platforms and versions.

The repository does a good job in following its component-based architecture, but contains many large units with high complexity. This can be attributed to the fact that NumPy simply provides a whole lot of different functions to work with arrays. These functions usually work by themselves and are grouped by category through the separation in different components.

1. NumPy GitHub, retrieved on 2020-03-26. https://github.com/numpy/numpy

2. Contributing to NumPy, retrieved on 2020-03-26. https://numpy.org/devdocs/dev/index.html

3. Azure pipelines config. https://github.com/numpy/numpy/blob/master/azure-pipelines.yml

4. Travis CI config. https://github.com/numpy/numpy/blob/master/.travis.yml

5. LGTM GitHub page. https://github.com/marketplace/lgtm

6. Dependabot config. https://github.com/numpy/numpy/blob/master/.dependabot/config.yml

7. NumPy testing guidelines, retrieved on 2020-03-26. https://docs.scipy.org/doc/numpy/reference/testing.html

8. Tornhill, A. (2015). Your code as a crime scene: use forensic techniques to arrest defects, bottlenecks, and bad design in your programs. Dallas, TX: The Pragmatic Bookshelf.

9. Hotspots documentation from CodeScene. https://codescene.io/docs/guides/technical/hotspots.html

10. Code Health documentation from CodeScene, retrieved on 2020-03-26. https://codescene.io/docs/guides/technical/biomarkers.html

11. NumPy’s roadmap, retrieved on 2020-03-26. https://numpy.org/neps/roadmap.html

12. Section about website and documentation of NumPy’s roadmap, retrieved on 2020-03-05. https://numpy.org/neps/roadmap.html#website-and-documentation

13. Software Improvement Group user manual, retrieved on 2020-03-26. https://sigrid-says.com/assets/sigrid_user_manual_20191224.pdf

14. Martin Fowler: Technical Debt. https://www.martinfowler.com/bliki/TechnicalDebt.html